Our next article is about improving the camera support in the Maze sample.

The main problem is that I like features of both camera types in DXUT, and I want to combine the behavior of CModelViewerCamera and CFirstPersonCamera. CFirstPersonCamera provides standard keyboard control of the camera, while CModelViewerCamera provides mouse rotation and mouse wheel control.

I want both. The least destructive way to do this is to add a new subclass of the DXUT classes, and mingle the code there without changing DXUT.

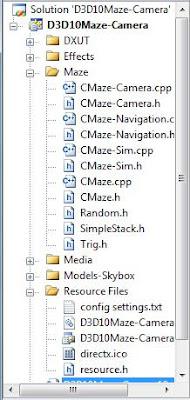

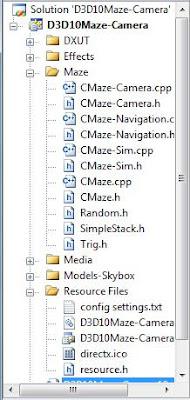

The Camera Project

Here is a screenshot of the updated project, with the CMazeCamera source/header file pair added.

Solution Window

We’ll cover the changes in three sections: New Camera Class, Callback Changes, and Conclusion.

New Camera Class

Updating the camera controls amounts to merging the FrameMove methods of the two DXUT camera classes. I will do a little information hiding to make integrating the camera a bit easier, but the basic idea is to take what both methods do and merge it.

With that in mind, I will start with the CFirstPersonCamera class and make a subclass:

    class CMazeFirstPersonCamera : public CFirstPersonCamera
    {
    public:
        D3DXMATRIX mCameraRot;
        D3DXMATRIX mCameraTrans;

        void SetYaw(float fYaw) { m_fCameraYawAngle = fYaw; }
        void SetEye(D3DXVECTOR3 vEye ) { m_vEye = vEye; }

        void InternalFrameMove(FLOAT fElapsedTime);
        virtual void FrameMove(FLOAT fElapsedTime);

        D3DXMATRIX Render10(ID3D10Device* pd3dDevice);
        D3DXMATRIX Render9(IDirect3DDevice9* pd3dDevice);
    };

The FrameMove method invokes InternalFrameMove, which does the heavy lifting, and then prepares some matrices for later use.

    void CMazeFirstPersonCamera::FrameMove(FLOAT fElapsedTime)
    {
        //CFirstPersonCamera::FrameMove(fElapsedTime);
        InternalFrameMove(fElapsedTime);

        // Make a rotation matrix based on the camera's yaw (pitch and roll are fixed at 0)
        D3DXMatrixRotationYawPitchRoll( &mCameraRot, m_fCameraYawAngle, 0, 0 );

        // Make a translation matrix based on the eye position
        D3DXMatrixTranslation( &mCameraTrans, -m_vEye.x, -m_vEye.y, -m_vEye.z );
    }
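To make the two matrices concrete, here is a minimal sketch of what they do to a point, written in plain C++ so it runs without D3DX. It assumes the row-vector, left-handed convention that D3DX uses, and the names Vec3, RotateByYaw, and TranslateByNegativeEye are hypothetical stand-ins, not part of DXUT or of the Maze sample.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for D3DXVECTOR3 so the sketch builds without D3DX.
struct Vec3 { float x, y, z; };

// Rotate a vector about the Y axis by `yaw` radians, using the row-vector,
// left-handed convention: a positive yaw turns +Z (ahead) toward +X.
// This mirrors a yaw-only rotation matrix with pitch and roll at zero.
Vec3 RotateByYaw(const Vec3& v, float yaw)
{
    const float c = std::cos(yaw);
    const float s = std::sin(yaw);
    return Vec3{ v.x * c + v.z * s, v.y, -v.x * s + v.z * c };
}

// Translate a point by the negated eye position, which is what a translation
// matrix built from (-eye.x, -eye.y, -eye.z) does: the eye lands on the origin.
Vec3 TranslateByNegativeEye(const Vec3& p, const Vec3& eye)
{
    return Vec3{ p.x - eye.x, p.y - eye.y, p.z - eye.z };
}
```

With a yaw of a quarter turn, the local ahead vector (0, 0, 1) ends up pointing along +X, and translating the eye position itself yields the origin.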

InternalFrameMove is the CFirstPersonCamera implementation with two changes.

First, I uncomment

    // Get the mouse movement (if any) if the mouse buttons are down
    if( ( m_nActiveButtonMask & m_nCurrentButtonMask ) || m_bRotateWithoutButtonDown )
        UpdateMouseDelta(); // was UpdateMouseDelta( fElapsedTime )

to get the mouse delta, and remove the fElapsedTime parameter, which is no longer needed.

Second, after

    // Move the eye position
    m_vEye += vPosDeltaWorld;

I insert

    // Update by mouse wheel
    if( m_nMouseWheelDelta )//&& m_nZoomButtonMask == MOUSE_WHEEL )
    {
        float m_fRadius; // Distance from the camera to model
        m_fRadius = 5.0f;
        m_vVelocity.z = 10.0f*(m_nMouseWheelDelta*m_fRadius*0.1f/12.0f);
        m_nMouseWheelDelta = 0;

        // Simple euler method to calculate position delta
        D3DXVECTOR3 vPosDelta = m_vVelocity * fElapsedTime;

        // Transform vectors based on camera's rotation matrix
        D3DXVECTOR3 vWorldUp, vWorldAhead;
        D3DXVECTOR3 vLocalUp = D3DXVECTOR3( 0, 1, 0 );
        D3DXVECTOR3 vLocalAhead = D3DXVECTOR3( 0, 0, 1 );
        D3DXVec3TransformCoord( &vWorldUp, &vLocalUp, &mCameraRot );
        D3DXVec3TransformCoord( &vWorldAhead, &vLocalAhead, &mCameraRot );

        // Transform the position delta by the camera's rotation
        D3DXVECTOR3 vPosDeltaWorld;
        D3DXVec3TransformCoord( &vPosDeltaWorld, &vPosDelta, &mCameraRot );
        m_vEye += vPosDeltaWorld;
    }

before

    if( m_bClipToBoundary )
        ConstrainToBoundary( &m_vEye );

The inserted block is adapted from CModelViewerCamera; it takes the mouse wheel delta and converts it into camera movement.
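The arithmetic in the wheel block is easier to follow in isolation. The following is a rough sketch in plain C++, assuming the same constants as the snippet above (radius 5.0f and the 10.0f * 0.1f / 12.0f scaling) and a yaw-only rotation. Vec3, WheelToForwardSpeed, and WheelDisplacement are hypothetical names introduced for illustration only.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for D3DXVECTOR3 so the sketch builds without D3DX.
struct Vec3 { float x, y, z; };

// Forward speed derived from the accumulated wheel delta, using the same
// expression as the wheel block. The radius default of 5.0f matches the
// hard-coded value there.
float WheelToForwardSpeed(int wheelDelta, float radius = 5.0f)
{
    return 10.0f * (wheelDelta * radius * 0.1f / 12.0f);
}

// Eye displacement for one frame: a single Euler step (speed * elapsed time)
// along the local ahead axis (0, 0, 1), then rotated about Y by the yaw angle
// (row-vector, left-handed convention: positive yaw turns +Z toward +X).
Vec3 WheelDisplacement(int wheelDelta, float yaw, float elapsedSeconds)
{
    const float dz = WheelToForwardSpeed(wheelDelta) * elapsedSeconds;
    return Vec3{ dz * std::sin(yaw), 0.0f, dz * std::cos(yaw) };
}
```

On Windows one wheel notch reports a delta of 120 (WHEEL_DELTA), so under these assumptions a single notch maps to a forward speed of 50 units per second, which is then scaled by the frame time.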

Et voila. Now I have a camera that responds to both sets of controls, mouse and keyboard.

Callback changes

Since we now have a unified camera, we can define both camera variables with the same type:

    CMazeFirstPersonCamera g_SkyCamera;  // A first-person camera
    CMazeFirstPersonCamera g_SelfCamera; // A first-person camera

The only callback changes are in the render code and the key handling code.

For OnD3D10FrameRender and D3D10, the code for each camera is now the same, since they are of the same type.

    // proj
    mProj = *g_SkyCamera.GetProjMatrix();

    // view
    if ( g_bSelfCam )
    {
        mView = *g_SelfCamera.GetViewMatrix();
        mViewSkybox = mView;
    }
    else if ( g_bSkyCam )
    {
        mView = *g_SkyCamera.GetViewMatrix();
        mViewSkybox = mView;
    }

For OnD3D9FrameRender and D3D9, the code for each camera is now also the same, since they are of the same type.

    // proj
    mProj = *g_SkyCamera.GetProjMatrix();

    // view
    if ( g_bSelfCam )
    {
        mView = *g_SelfCamera.GetViewMatrix();
        mViewSkybox = mView;
    }
    else if ( g_bSkyCam )
    {
        mView = *g_SkyCamera.GetViewMatrix();
        mViewSkybox = mView;
    }

Now we update the key handler to take input for the maze camera.

    //---------------------------------------------
    // Handle messages to the application
    //---------------------------------------------
    LRESULT CALLBACK MsgProc( HWND hWnd, UINT uMsg, WPARAM wParam, LPARAM lParam,
                              bool* pbNoFurtherProcessing, void* pUserContext )
    {
        // Pass messages to sky camera so it can respond to user input
        g_SkyCamera.HandleMessages( hWnd, uMsg, wParam, lParam );

        // Pass messages when paused to self camera so it can respond to input
        if ( !g_bRunSim )
            g_SelfCamera.HandleMessages( hWnd, uMsg, wParam, lParam );

        return 0;
    }

The second part, sending input to g_SelfCamera, is new. It is the change an alert reader brought up a few articles ago, so we have closed that loop. Note that we only do this when paused, since the solver modifies the self camera while the sim is running. One writer per variable at a time is a good rule.
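The routing rule can be shown without any Windows types. This is only a sketch: MockCamera and CameraRouter are hypothetical stand-ins for the two camera objects and the message handler, and the runSim flag plays the role of g_bRunSim.

```cpp
#include <cassert>

// Hypothetical stand-in for a camera; it only counts the messages it receives.
struct MockCamera
{
    int messagesHandled = 0;
    void HandleMessages(unsigned /*uMsg*/) { ++messagesHandled; }
};

// Hypothetical router that mirrors the handler's logic: the sky camera always
// sees input, while the self camera sees input only when the sim is paused,
// so the solver and the user never write to it at the same time.
struct CameraRouter
{
    MockCamera sky;
    MockCamera self;
    bool runSim = true;

    void Dispatch(unsigned uMsg)
    {
        sky.HandleMessages(uMsg);
        if (!runSim)
            self.HandleMessages(uMsg);
    }
};
```

While runSim is true, only the sky camera's counter advances; once the sim is paused, both cameras receive each message.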

And that is it! Very simple yet effective. Now the mouse can be used to turn the camera, the mouse wheel can be used to move the position in-out, and the keyboard keys can be used to move the position.

No other callback changes are required. Sweet!

Conclusion

This builds on the previous article. Now we have a skybox rendering, a maze generating and rendering, a simple solver for the maze, and camera controls.

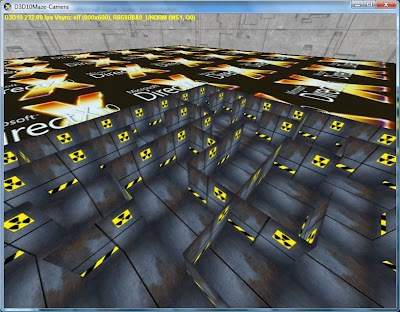

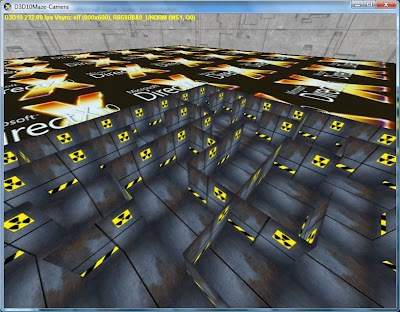

For this article there is only a single screenshot showing a new camera position. I reached it with a combination of mouse rotations, mouse wheel clicks, and keyboard keys.

Using the new camera class

Next up in the series are:

· an animated character rendered at the solver location,

· additional maze rendering features, and

· generating a new maze when the current one is fully solved.

I am still working on the code for maze goals. The rendering I want to do for the goals has held me up a bit, so when I get that complete I will add it to the series.
