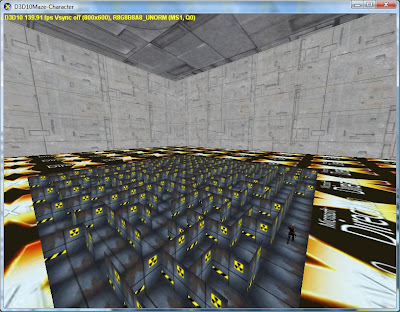

Figure 1: the animated character in the maze.

With this sample, an animated character brings some life to the maze. This is the first in a series of steps making the maze rendering more interesting.

The DX SDK includes a skinned model for both D3D9 and D3D10, but uses a different model for each API. I’ll do the same, so the floor texture of the skybox and the character will provide a visual clue as to which API is being used.

The sample also uses the DXUT UI controls to let you adjust the speed of the animation playback.

The Character Project

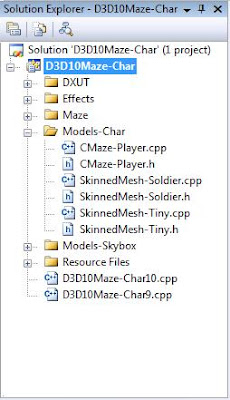

Here is a screenshot of the updated project, with the added folders for the two characters:

Figure 2:Solution Window

We’ll cover the changes in 4 sections: New Classes for Characters, Callback Changes, UI, and Conclusion.

New Classes for Characters

We have three new classes in three header/source pairs: CMazePlayer, SkinnedMeshSoldier, and SkinnedMeshTiny. SkinnedMeshSoldier and SkinnedMeshTiny hold the code to load the D3D10 skinned character (Soldier) and the D3D9 skinned character (Tiny), respectively.

The treatment here follows from my 2003 flipcode.com article, “Modular D3D SkinnedMesh - Towards A Better Modularity Within The D3D SkinnedMesh Sample”.

The idea is to encapsulate the SDK code so that it’s easier to pick up and reuse. So what is CMazePlayer for? It is a class that encapsulates dealing with skinned meshes under D3D9 versus D3D10, so the main body of the code is a bit cleaner.

Let’s start with CMazePlayer and see how it makes the D3D9 and D3D10 code paths easier to read. Here is the class definition in CMazePlayer.h:

```cpp
class CMazePlayer
{
public:
    CMazePlayer();
    ~CMazePlayer();

    //mini-API for D3D10
    HRESULT LoadPlayer10(ID3D10Device* pd3dDevice);
    void UpdatePlayer10(float fTime);
    void RenderPlayer10(ID3D10Device* pd3dDevice);
    void UnloadPlayer10(ID3D10Device* pd3dDevice);

    //mini-API for D3D9
    HRESULT LoadPlayer9(IDirect3DDevice9* pd3dDevice,
                        const D3DSURFACE_DESC* pBackBufferSurfaceDesc);
    void UpdatePlayer9(float fTime, float fElapsedTime);
    void RenderPlayer9(IDirect3DDevice9* pd3dDevice,
                       float fTime, float fElapsedTime);
    void UnloadPlayer9(IDirect3DDevice9* pd3dDevice);

    //extra resource management for D3D9
    HRESULT OnResetDevice9(IDirect3DDevice9* pd3dDevice,
                           const D3DSURFACE_DESC* pBackBufferSurfaceDesc);
    void OnLostDevice9();
};
```

What we see is what I call a “mini-API”: a convention we adopt and structure our code around.

The mini-API identifies four key actions, for both D3D10 and D3D9:

· Load

· Update

· Render

· Unload

D3D9 requires two additional methods, OnResetDevice and OnLostDevice, to handle the extra resource management it needs.
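CMazePlayer keeps the two APIs apart with suffixed method names (LoadPlayer10, LoadPlayer9, and so on). As an illustration only, here is a minimal sketch of the same Load/Update/Render/Unload convention expressed as an abstract interface; this is one possible alternative, not what the sample does. IPlayerBackend, LoggingBackend, and RunFrame are hypothetical names, and the device parameters are dropped so the sketch compiles without the DirectX SDK.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical interface for the mini-API (device parameters omitted).
class IPlayerBackend
{
public:
    virtual ~IPlayerBackend() {}
    virtual bool Load() = 0;
    virtual void Update( double fTime, float fElapsedTime ) = 0;
    virtual void Render() = 0;
    virtual void Unload() = 0;
    // D3D9-only hooks default to no-ops so a D3D10 backend can ignore them.
    virtual bool OnResetDevice() { return true; }
    virtual void OnLostDevice() {}
};

// Hypothetical backend that records the calls it receives and checks
// that Update/Render only happen between Load and Unload.
class LoggingBackend : public IPlayerBackend
{
public:
    explicit LoggingBackend( const std::string& name ) : m_name( name ) {}

    bool Load() override { m_loaded = true; Log( "Load" ); return true; }
    void Update( double, float ) override { assert( m_loaded ); Log( "Update" ); }
    void Render() override { assert( m_loaded ); Log( "Render" ); }
    void Unload() override { m_loaded = false; Log( "Unload" ); }

    const std::vector<std::string>& Calls() const { return m_calls; }

private:
    void Log( const char* what ) { m_calls.push_back( m_name + ":" + what ); }

    std::string m_name;
    std::vector<std::string> m_calls;
    bool m_loaded = false;
};

// One frame as the article's OnFrameRender callbacks drive it: update, then render.
inline void RunFrame( IPlayerBackend& player, double fTime, float fElapsedTime )
{
    player.Update( fTime, fElapsedTime );
    player.Render();
}
```

With a virtual interface, each DXUT callback could hold a single backend pointer and the D3D9-only hooks fall back to no-ops; the trade-off is an indirect call per method, which the sample’s plain suffixed methods avoid.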

What do these methods do?

The Load methods hide the details of loading the skinned character during the OnCreateDevice event.

```cpp
HRESULT CMazePlayer::LoadPlayer10(ID3D10Device* pd3dDevice)
{
    HRESULT hr;
    //create device dependent objects
    g_SkinnedMesh10.OnCreateDevice( pd3dDevice );
    return S_OK;
}

HRESULT CMazePlayer::LoadPlayer9(IDirect3DDevice9* pd3dDevice,
                                 const D3DSURFACE_DESC* pBackBufferSurfaceDesc)
{
    HRESULT hr;
    g_SkinnedMesh9.OnCreateDevice(pd3dDevice,pBackBufferSurfaceDesc);
    return S_OK;
}
```

The Update methods hide the details of animating the character during the OnFrameMove event.

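```cpp
void CMazePlayer::UpdatePlayer10( float fTime)
{
    // initialize the position so a defined value is passed down
    D3DXVECTOR3 vLightPos( 0.0f, 0.0f, 0.0f );
    g_SkinnedMesh10.OnFrameMove( vLightPos, fTime, 0 );
}

void CMazePlayer::UpdatePlayer9( float fTime,float fElapsedTime)
{
    // initialize the position so a defined value is passed down
    D3DXVECTOR3 mSkinPos( 0.0f, 0.0f, 0.0f );
    g_SkinnedMesh9.OnFrameMove(mSkinPos,
                               fTime,fElapsedTime);
}
```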

The Render methods hide the details of drawing the character during the OnFrameRender event.

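```cpp
void CMazePlayer::RenderPlayer10(ID3D10Device* pd3dDevice)
{
    // local light position; initialized so a defined value is passed down
    D3DXVECTOR3 g_vLightPos( 0.0f, 0.0f, 0.0f );
    g_SkinnedMesh10.OnFrameRender( pd3dDevice,0,0,g_vLightPos );
}

void CMazePlayer::RenderPlayer9(IDirect3DDevice9* pd3dDevice,
                                float fTime, float fElapsedTime)
{
    // local light position; initialized so a defined value is passed down
    D3DXVECTOR3 g_vLightPos( 0.0f, 0.0f, 0.0f );
    g_SkinnedMesh9.OnFrameRender(pd3dDevice,fTime,0,g_vLightPos);
}
```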

The Unload methods hide the details of releasing the character’s resources during the OnDestroyDevice event.

```cpp
void CMazePlayer::UnloadPlayer10(ID3D10Device* pd3dDevice)
{
    g_SkinnedMesh10.OnDestroyDevice();
}

void CMazePlayer::UnloadPlayer9(IDirect3DDevice9* pd3dDevice)
{
    g_SkinnedMesh9.OnDestroyDevice();
}
```

For D3D9, the OnResetDevice9 and OnLostDevice9 methods hide the additional details of managing the character’s resources during the OnResetDevice and OnLostDevice events.

```cpp
HRESULT CMazePlayer::OnResetDevice9(IDirect3DDevice9* pd3dDevice,
                                    const D3DSURFACE_DESC* pBackBufferSurfaceDesc)
{
    HRESULT hr;
    g_SkinnedMesh9.OnResetDevice(pd3dDevice,pBackBufferSurfaceDesc);
    return S_OK;
}

void CMazePlayer::OnLostDevice9()
{
    g_SkinnedMesh9.OnLostDevice();
}
```
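These two extra D3D9 hooks exist because DXUT drives a D3D9 device through create, reset, lost, and destroy events: resources in D3DPOOL_DEFAULT, plus objects such as ID3DXEffect and ID3DXFont that expose their own OnLostDevice/OnResetDevice methods, must be released when the device is lost and recreated on reset. The hypothetical Lifecycle9 class below is a sketch that models only that ordering, with no real Direct3D calls, so the invariants can be checked.

```cpp
#include <cassert>

// Hypothetical model of the D3D9 lifecycle CMazePlayer forwards to.
// "Managed" resources live from create to destroy; "default-pool"
// resources live only from reset to the next lost-device event.
class Lifecycle9
{
public:
    void OnCreateDevice()  { assert( !m_created ); m_created = true; }
    void OnResetDevice()   { assert( m_created && !m_reset ); m_reset = true; }
    void OnLostDevice()    { assert( m_reset ); m_reset = false; }
    // DXUT always reports a lost device before destroying it.
    void OnDestroyDevice() { assert( m_created && !m_reset ); m_created = false; }

    bool HasManagedResources() const     { return m_created; }
    bool HasDefaultPoolResources() const { return m_reset; }
    bool CanRender() const               { return m_created && m_reset; }

private:
    bool m_created = false;
    bool m_reset = false;
};
```

The sequence DXUT follows is create, reset, then any number of lost/reset pairs (for example on a window resize or a full-screen toggle), and finally lost followed by destroy.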

How do we use these methods? We’ll see that in the Callback Changes section.

What happens down in the Tiny and Soldier classes?

The DX SDK documentation for the Tiny sample and my flipcode.com article cover this in more detail than I have room for here; if you want to understand the nitty-gritty, go there.

I will point out that for the Soldier code, I have collapsed the more general SDK sample code and only use the constant buffer skinning method. Constant buffer skinning is the most similar to traditional Direct3D 9-style GPU-based skinning. Transformation matrices are loaded into shader constants, and the vertex shader indexes them using the bone index in the vertex stream. As in most Direct3D 9 implementations, the number of bone indices per vertex is limited to 4. Unlike most Direct3D 9 implementations, the skinning method used in this sample does not create a palette of matrices, nor does it divide the mesh into sections of vertices that share bone indices. This is because Direct3D 10 allows constant buffers to be much larger than the Direct3D 9 constant register file. Only for meshes with several hundred bones would splitting the bone matrices into palettes be necessary. The code for indexing the bones in the shader is as follows:

```hlsl
mret = g_mConstBoneWorld[ iBone ];
```
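To make the constant-buffer approach concrete, here is a CPU-side C++ sketch of the same math the vertex shader performs: each vertex carries up to four bone indices and weights, and each index selects a matrix from one flat array (the analogue of g_mConstBoneWorld), with no per-section palette. The types and function names are hypothetical, the matrices follow the D3DX row-vector convention (v' = v * M), and the weights are assumed to sum to 1. It illustrates the technique; it is not the SDK shader.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

struct Mat4 { float m[4][4]; };   // row-major, row-vector convention
struct Vec3 { float x, y, z; };

// A skinned vertex with at most four bone influences.
struct SkinnedVertex
{
    Vec3 pos;
    std::array<unsigned, 4> boneIndex;
    std::array<float, 4> boneWeight;   // assumed to sum to 1
};

// v' = v * M for a point (w = 1).
inline Vec3 TransformPoint( const Vec3& v, const Mat4& M )
{
    return { v.x * M.m[0][0] + v.y * M.m[1][0] + v.z * M.m[2][0] + M.m[3][0],
             v.x * M.m[0][1] + v.y * M.m[1][1] + v.z * M.m[2][1] + M.m[3][1],
             v.x * M.m[0][2] + v.y * M.m[1][2] + v.z * M.m[2][2] + M.m[3][2] };
}

// Linear blend skinning: every bone matrix is addressable by index,
// just as the shader indexes g_mConstBoneWorld[ iBone ].
inline Vec3 SkinVertex( const SkinnedVertex& v, const std::vector<Mat4>& boneWorld )
{
    Vec3 out = { 0.0f, 0.0f, 0.0f };
    for ( std::size_t i = 0; i < 4; ++i )
    {
        assert( v.boneIndex[i] < boneWorld.size() );
        const Vec3 p = TransformPoint( v.pos, boneWorld[ v.boneIndex[i] ] );
        out.x += p.x * v.boneWeight[i];
        out.y += p.y * v.boneWeight[i];
        out.z += p.z * v.boneWeight[i];
    }
    return out;
}

// Helper: translation matrix in the row-vector convention.
inline Mat4 Translation( float tx, float ty, float tz )
{
    Mat4 M = {};
    M.m[0][0] = M.m[1][1] = M.m[2][2] = M.m[3][3] = 1.0f;
    M.m[3][0] = tx; M.m[3][1] = ty; M.m[3][2] = tz;
    return M;
}
```

A vertex at (1, 1, 1) weighted half to an identity bone and half to a bone translated by 2 along x ends up at (2, 1, 1), halfway between the two bone-space results.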

Now we are ready for the callback changes.

Callback changes

At OnCreateDevice time we call the LoadPlayer method for that D3D version.

```cpp
HRESULT CALLBACK OnD3D10CreateDevice( ID3D10Device* pd3dDevice, const DXGI_SURFACE_DESC* pBackBufferSurfaceDesc,
                                      void* pUserContext )
{
    …
    //load skinned character
    g_SkinnedPlayer.LoadPlayer10(pd3dDevice);
    …
}

HRESULT CALLBACK OnD3D9CreateDevice( IDirect3DDevice9* pd3dDevice, const D3DSURFACE_DESC* pBackBufferSurfaceDesc,
                                     void* pUserContext )
{
    …
    //load skinned character (LoadPlayer9 also takes the back buffer description)
    g_SkinnedPlayer.LoadPlayer9(pd3dDevice, pBackBufferSurfaceDesc);
    …
}
```

At OnFrameMove time, since the framework treats that callback as API-independent, we cannot invoke an API-specific UpdatePlayer method, so we skip it there.

At OnFrameRender time we call the RenderPlayer method for that D3D version:

```cpp
void CALLBACK OnD3D10FrameRender( ID3D10Device* pd3dDevice, double fTime, float fElapsedTime, void* pUserContext )
{
    …
    //move self character
    g_SkinnedPlayer.UpdatePlayer10((float)fTime);
    //render self character
    g_SkinnedPlayer.RenderPlayer10(pd3dDevice);
    …
}

void CALLBACK OnD3D9FrameRender( IDirect3DDevice9* pd3dDevice, double fTime, float fElapsedTime, void* pUserContext )
{
    …
    //move self character
    g_SkinnedPlayer.UpdatePlayer9((float)fTime,(float)fElapsedTime);
    //render self character
    g_SkinnedPlayer.RenderPlayer9(pd3dDevice,
                                  (float)fTime,(float)fElapsedTime);
    …
}
```

The OnFrameRender calls invoke the UpdatePlayer method because the framework treats the OnFrameMove method as API independent when it often isn’t.

At OnDestroyDevice time we call the UnloadPlayer method for that D3D version:

```cpp
void CALLBACK OnD3D10DestroyDevice( void* pUserContext )
{
    …
    //skinned character
    g_SkinnedPlayer.UnloadPlayer10(NULL);
    …
}

void CALLBACK OnD3D9DestroyDevice( void* pUserContext )
{
    …
    //skinned character
    g_SkinnedPlayer.UnloadPlayer9(NULL);
    …
}
```

D3D9 needs a little help with the resources that cannot persist through a device reset. There, the OnResetDevice and OnLostDevice callbacks do the heavy lifting:

```cpp
HRESULT CALLBACK OnD3D9ResetDevice( IDirect3DDevice9* pd3dDevice, const D3DSURFACE_DESC* pBackBufferSurfaceDesc,
                                    void* pUserContext )
{
    …
    //skinned character
    g_SkinnedPlayer.OnResetDevice9(pd3dDevice,pBackBufferSurfaceDesc);
    …
}

void CALLBACK OnD3D9LostDevice( void* pUserContext )
{
    …
    //skinned character
    g_SkinnedPlayer.OnLostDevice9();
    …
}
```

And that is it! We now have the animated character in the maze, representing the “self camera” and solver location. The second camera, the “skycam”, provides a remote observer position from which to view the rendering.

Here are 2 FRAPS movie captures to show the animated character in both D3D10 and D3D9, since a static image doesn’t do it justice.

D3D10 movie

D3D9 movie

UI

One change in this sample is the use of the UI controls provided by DXUT. These controls are rendered with Direct3D and interacted with inside the 3D scene, a true 3D UI. This means the UI never leaves the 3D pipeline, which should generally perform better than flushing the pipeline to render the UI in 2D.

We use DXUT UI objects for both the mode controls and the app controls. The mode controls, for toggling full screen mode, toggling the REF reference rasterizer device, and changing devices, are exactly what the standard DX SDK samples provide. In addition, I provide a control for changing the divisor for the animation speed. Let’s take a look at how that is done.

Basic UI setup

The changes start in wWinMain. We also add new App callbacks for the UI and have to add handling code in the existing App callbacks.

Alongside wWinMain, at file scope, we declare our UI globals:

```cpp
//3d hud ui
CDXUTDialogResourceManager g_DialogResourceManager;
CD3DSettingsDlg g_D3DSettingsDlg;
CDXUTDialog g_HUD;
CDXUTDialog g_SampleUI;
BOOL g_bShowUI = false;
```

We add an InitApp method to hide the code to create the UI.

```cpp
void InitApp();
```

And we change wWinMain to call InitApp:

```cpp
int WINAPI wWinMain( HINSTANCE hInstance, HINSTANCE hPrevInstance, LPWSTR lpCmdLine, int nCmdShow )
{
    …
    // Perform any application-level initialization here
    DXUTSetIsInGammaCorrectMode(false);
    InitApp();
    …
}
```

InitApp gets our UI going.

```cpp
void InitApp()
{
    g_D3DSettingsDlg.Init( &g_DialogResourceManager );
    g_HUD.Init( &g_DialogResourceManager );
    g_SampleUI.Init( &g_DialogResourceManager );

    g_HUD.SetCallback( OnGUIEvent );
    int iY = 10;
    g_HUD.AddButton( IDC_TOGGLEFULLSCREEN, L"Toggle full screen", 35, iY, 125, 22 );
    g_HUD.AddButton( IDC_TOGGLEREF, L"Toggle REF (F3)", 35, iY += 24, 125, 22, VK_F3 );
    g_HUD.AddButton( IDC_CHANGEDEVICE, L"Change device (F2)", 35, iY += 24, 125, 22, VK_F2 );

    g_SampleUI.SetCallback( OnGUIEvent );
    WCHAR sz[100];
    StringCchPrintf( sz, 100, L"Animation Divisor: %0.2f", g_fModelAnimSpeed );
    g_SampleUI.AddStatic( IDC_ANIMSPEED_STATIC, sz, 35, iY += 24, 125, 22 );
    // slider positions 1..100 map to divisors 0.1..10.0 (see OnGUIEvent);
    // scale before casting so a divisor such as 0.8 is not truncated to 0
    g_SampleUI.AddSlider( IDC_ANIMSPEED_SCALE, 50, iY += 24, 100, 22, 1, 100,
                          ( int )( g_fModelAnimSpeed * 10.0f + 0.5f ) );
}
```

We see that InitApp calls the Init methods on the CD3DSettingsDlg object g_D3DSettingsDlg, the CDXUTDialog object g_HUD, and the CDXUTDialog object g_SampleUI, passing each of them a pointer to the shared CDXUTDialogResourceManager object g_DialogResourceManager.

Then we set the OnGUIEvent callback for the g_HUD object, so we get the callbacks for the mode controls, and add the buttons for toggling full screen, toggling the REF device, and changing the active device. Next we set the same OnGUIEvent callback for the g_SampleUI object, so we get the callbacks for the slider, and add a static text control and a slider for the animation divisor variable. More on those in a moment.

UI Event Callback

InitApp registers OnGUIEvent as the event handler for both the mode controls and the app controls, in this case the slider.

```cpp
void CALLBACK OnGUIEvent( UINT nEvent, int nControlID, CDXUTControl* pControl, void* pUserContext )
{
    switch( nControlID )
    {
        case IDC_TOGGLEFULLSCREEN:
            DXUTToggleFullScreen(); break;
        case IDC_TOGGLEREF:
            DXUTToggleREF(); break;
        case IDC_CHANGEDEVICE:
            g_D3DSettingsDlg.SetActive( !g_D3DSettingsDlg.IsActive() );
            break;
        case IDC_ANIMSPEED_SCALE:
        {
            WCHAR sz[100];
            // slider positions 1..100 become divisors 0.1..10.0
            g_fModelAnimSpeed = ( float )( g_SampleUI.GetSlider( IDC_ANIMSPEED_SCALE )->GetValue() * 0.1f );
            StringCchPrintf( sz, 100, L"Animation Divisor: %0.2f", g_fModelAnimSpeed );
            g_SampleUI.GetStatic( IDC_ANIMSPEED_STATIC )->SetText( sz );
            break;
        }
    }
}
```

For the mode, REF, and device changing functionality we reuse what DXUT gives us.

For the slider, we get the value, use that to set our app variable, then update the static text with the new value using DXUT methods for slider and static controls. This part of DXUT is useful and certainly shows how to use D3D rendering to produce UI.
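Because the slider stores integers, the app has to map between slider positions and divisor values in both directions. Here is a small sketch of that mapping using the range (1 to 100) and scale (0.1) from the code above; the function names are hypothetical, and std::snprintf stands in for StringCchPrintf so the sketch builds without Windows headers. Note that the inverse mapping must scale before converting to an integer: an expression of the form (int)(divisor) * 10 truncates first, so a divisor of 0.8 would become slider position 0.

```cpp
#include <cassert>
#include <cmath>
#include <cstdio>
#include <string>

const int kSliderMin = 1;        // AddSlider min in InitApp
const int kSliderMax = 100;      // AddSlider max in InitApp
const float kSliderScale = 0.1f; // multiplier used in OnGUIEvent

// Slider position -> animation divisor (0.1 .. 10.0).
inline float SliderToDivisor( int value )
{
    assert( value >= kSliderMin && value <= kSliderMax );
    return value * kSliderScale;
}

// Animation divisor -> slider position, rounded and clamped to the range.
inline int DivisorToSlider( float divisor )
{
    long v = std::lround( divisor / kSliderScale );
    if ( v < kSliderMin ) v = kSliderMin;
    if ( v > kSliderMax ) v = kSliderMax;
    return static_cast<int>( v );
}

// Same label text the static control shows.
inline std::string DivisorLabel( float divisor )
{
    char sz[100];
    std::snprintf( sz, sizeof( sz ), "Animation Divisor: %0.2f", divisor );
    return sz;
}
```

Round-tripping through these helpers keeps the slider and the label in agreement: a divisor of 0.8 maps to position 8, which maps back to 0.8 and displays as “Animation Divisor: 0.80”.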

Note the variable g_fModelAnimSpeed. We use it in the skinned mesh animation calls to update the animation clock:

```cpp
void CALLBACK CSkinnedMesh10::OnFrameMove( D3DXVECTOR3 vSelfPos,
                                           double fTime, float fElapsedTime )
{
    D3DXMATRIX mIdentity;
    D3DXMatrixIdentity( &mIdentity );
    g_SkinnedMesh10.TransformMesh( &mIdentity, fTime/g_fModelAnimSpeed );
}

void CALLBACK CSkinnedMesh9::OnFrameMove( D3DXVECTOR3 vSelfPos,
                                          double fTime, float fElapsedTime )
{
    …
    if( g_pAnimController != NULL )
        g_pAnimController->AdvanceTime( fElapsedTime/g_fModelAnimSpeed, NULL );
    UpdateFrameMatrices( g_pFrameRoot, &mWorld);
}
```

We then use the divisor to control the animation rate. The slider covers divisors from 0.1 to 10, so the animation runs anywhere from 10x faster (divisor 0.1) to 10x slower (divisor 10) than normal. Currently the time tick that DXUT provides drives the animation clock directly, which is probably not ideal. We probably want a separate tick, at 30 or 60 ticks a second, to drive the animation at a standard rate independent of the frame rate, and then apply the divisor there to slow down or speed up the animation clock.

For now, the simple divisor given by the slider variable lets us fiddle with the speed even if it isn’t quite correct. Perhaps in a future article, when we have more to animate and want to be closer to correct, I will explore a clock system to drive different animations at different speeds.
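The separate tick suggested above could look roughly like the following sketch. AnimationClock is a hypothetical class, not part of DXUT: it divides each frame’s elapsed time by the divisor, accumulates the result, and hands out fixed steps (60 Hz by default), which the caller would then feed to the animation one step at a time.

```cpp
#include <cassert>

// Hypothetical fixed-step animation clock with a speed divisor.
class AnimationClock
{
public:
    explicit AnimationClock( double stepSeconds = 1.0 / 60.0 )
        : m_step( stepSeconds ) {}

    void SetDivisor( float divisor ) { assert( divisor > 0.0f ); m_divisor = divisor; }

    // Consumes one frame's real elapsed time; returns how many fixed
    // animation steps the caller should run this frame.
    int Advance( float fElapsedTime )
    {
        // Scale the increment, not absolute time, so a divisor change
        // alters the rate from this frame on without making the pose jump.
        m_accumulator += fElapsedTime / m_divisor;
        int steps = 0;
        while ( m_accumulator >= m_step )
        {
            m_accumulator -= m_step;
            m_animTime += m_step;
            ++steps;
        }
        return steps;
    }

    double AnimTime() const { return m_animTime; }

private:
    double m_step;
    double m_accumulator = 0.0;
    double m_animTime = 0.0;
    float m_divisor = 1.0f;
};
```

Scaling the increment matters once the divisor can change at run time. The D3D10 path above computes fTime/g_fModelAnimSpeed from absolute time, so moving the slider from 1 to 2 at t = 10 s would snap the animation from 10 s back to 5 s, a visible pop; the D3D9 path’s AdvanceTime(fElapsedTime/g_fModelAnimSpeed) only changes the rate from that frame on.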

App Callbacks

We’ll need to add handling to several App callbacks.

In OnCreateDevice:

```cpp
HRESULT CALLBACK OnD3D10CreateDevice( ID3D10Device* pd3dDevice, const DXGI_SURFACE_DESC* pBackBufferSurfaceDesc,
                                      void* pUserContext )
{
    HRESULT hr; // used by the V_RETURN macro
    //3d hud
    V_RETURN( g_DialogResourceManager.OnD3D10CreateDevice( pd3dDevice ) );
    V_RETURN( g_D3DSettingsDlg.OnD3D10CreateDevice( pd3dDevice ) );
    …
}

HRESULT CALLBACK OnD3D9CreateDevice( IDirect3DDevice9* pd3dDevice, const D3DSURFACE_DESC* pBackBufferSurfaceDesc,
                                     void* pUserContext )
{
    HRESULT hr;
    //ui
    V_RETURN( g_DialogResourceManager.OnD3D9CreateDevice( pd3dDevice ) );
    V_RETURN( g_D3DSettingsDlg.OnD3D9CreateDevice( pd3dDevice ) );
    …
}
```

We call into the g_DialogResourceManager and the g_D3DSettingsDlg to update the objects with the device and create any device dependent objects.

In OnResetDevice:

```cpp
HRESULT CALLBACK OnD3D10ResizedSwapChain( ID3D10Device* pd3dDevice, IDXGISwapChain* pSwapChain,
                                          const DXGI_SURFACE_DESC* pBackBufferSurfaceDesc,
                                          void* pUserContext )
{
    HRESULT hr;
    V_RETURN( g_DialogResourceManager.OnD3D10ResizedSwapChain( pd3dDevice, pBackBufferSurfaceDesc ) );
    V_RETURN( g_D3DSettingsDlg.OnD3D10ResizedSwapChain( pd3dDevice, pBackBufferSurfaceDesc ) );
    …
    g_HUD.SetLocation( pBackBufferSurfaceDesc->Width - 170, 0 );
    g_HUD.SetSize( 170, 170 );
    g_SampleUI.SetLocation( pBackBufferSurfaceDesc->Width - 170, pBackBufferSurfaceDesc->Height - 300 );
    g_SampleUI.SetSize( 170, 300 );
    return S_OK;
}

HRESULT CALLBACK OnD3D9ResetDevice( IDirect3DDevice9* pd3dDevice,
                                    const D3DSURFACE_DESC* pBackBufferSurfaceDesc,
                                    void* pUserContext )
{
    HRESULT hr;
    //hud
    V_RETURN( g_DialogResourceManager.OnD3D9ResetDevice() );
    V_RETURN( g_D3DSettingsDlg.OnD3D9ResetDevice() );
    …
    //ui
    g_HUD.SetLocation( pBackBufferSurfaceDesc->Width - 170, 0 );
    g_HUD.SetSize( 170, 170 );
    g_SampleUI.SetLocation( 0, 0 );
    g_SampleUI.SetSize( pBackBufferSurfaceDesc->Width, pBackBufferSurfaceDesc->Height );
    g_SampleUI.GetControl( IDC_ANIMSPEED_STATIC )->SetSize( pBackBufferSurfaceDesc->Width,
                                                           pBackBufferSurfaceDesc->Height * 6 / 10 );
    BOOL bFullscreen = FALSE;
    bFullscreen = !DXUTIsWindowed();
    if (bFullscreen==FALSE)
        g_SampleUI.GetControl( IDC_ANIMSPEED_STATIC )->SetLocation( pBackBufferSurfaceDesc->Width/2-5*15, pBackBufferSurfaceDesc->Height-15*26);
    else
        g_SampleUI.GetControl( IDC_ANIMSPEED_STATIC )->SetLocation( pBackBufferSurfaceDesc->Width/2-5*15, pBackBufferSurfaceDesc->Height-15*30);
    g_SampleUI.GetControl( IDC_ANIMSPEED_SCALE )->SetLocation( pBackBufferSurfaceDesc->Width - 120, pBackBufferSurfaceDesc->Height-15*13);
    …
}
```

We call into the g_DialogResourceManager and the g_D3DSettingsDlg. And we update all the individual controls with the back buffer information.
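The dialog positions live in the reset/resize callbacks because they are expressed relative to the back-buffer size, which changes with the window size and full-screen mode. Here is a small sketch of the D3D10 placement arithmetic: both dialogs are 170 pixels wide and pinned to the right edge, with the HUD at the top and the 300-pixel-tall sample UI at the bottom. The helper names are hypothetical, and the D3D9 path above uses a different layout.

```cpp
#include <cassert>

struct DialogPos { int x, y; };

const int kDialogWidth = 170;     // width passed to SetSize for both dialogs
const int kSampleUIHeight = 300;  // height of g_SampleUI in the D3D10 path

// Top-right corner: mirrors g_HUD.SetLocation( Width - 170, 0 ).
inline DialogPos HudLocation( int backBufferWidth )
{
    assert( backBufferWidth >= kDialogWidth );
    return { backBufferWidth - kDialogWidth, 0 };
}

// Bottom-right corner: mirrors g_SampleUI.SetLocation( Width - 170, Height - 300 ).
inline DialogPos SampleUILocation( int backBufferWidth, int backBufferHeight )
{
    assert( backBufferWidth >= kDialogWidth && backBufferHeight >= kSampleUIHeight );
    return { backBufferWidth - kDialogWidth, backBufferHeight - kSampleUIHeight };
}
```

For a 1024x768 back buffer this places the HUD at (854, 0) and the sample UI at (854, 468).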

In OnFrameRender:

```cpp
void CALLBACK OnD3D10FrameRender( ID3D10Device* pd3dDevice, double fTime, float fElapsedTime, void* pUserContext )
{
    // Clear render target and the depth stencil
    float ClearColor[4] = { 0.176f, 0.196f, 0.667f, 0.0f };
    pd3dDevice->ClearRenderTargetView( DXUTGetD3D10RenderTargetView(), ClearColor );
    pd3dDevice->ClearDepthStencilView( DXUTGetD3D10DepthStencilView(), D3D10_CLEAR_DEPTH, 1.0, 0 );

    // If the settings dialog is being shown, then
    // render it instead of rendering the app's scene
    if( g_D3DSettingsDlg.IsActive() )
    {
        g_D3DSettingsDlg.OnRender( fElapsedTime );
        return;
    }
    …
    //
    // Render the UI
    //
    if ( g_bShowUI )
    {
        g_HUD.OnRender( fElapsedTime );
        g_SampleUI.OnRender( fElapsedTime );
    }
    //render stats
    RenderText10();
}

void CALLBACK OnD3D9FrameRender( IDirect3DDevice9* pd3dDevice, double fTime, float fElapsedTime, void* pUserContext )
{
    HRESULT hr;
    if( g_D3DSettingsDlg.IsActive() )
    {
        g_D3DSettingsDlg.OnRender( fElapsedTime );
        return;
    }
    …
    //HUD
    RenderText();
    //ui
    if ( g_bShowUI )
    {
        V( g_HUD.OnRender( fElapsedTime ) );
        V( g_SampleUI.OnRender( fElapsedTime ) );
    }
    …
}
```

We check whether g_D3DSettingsDlg is active and, if so, render only the settings dialog and return. Otherwise we render the scene and, when g_bShowUI is set, the 3D UI by calling the g_HUD and g_SampleUI OnRender methods.
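The control flow of the D3D10 render callback can be summarized as an ordered list of passes. The sketch below is a hypothetical trace function, not DXUT code, and the elided scene rendering is represented by a single “Scene” entry as an assumption. It shows that an active settings dialog replaces the frame entirely and that the UI is drawn after the scene so it appears on top. (The D3D9 callback draws the stats text before the UI rather than after it.)

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical trace of the passes one D3D10 OnFrameRender call performs.
inline std::vector<std::string> FramePasses( bool settingsActive, bool showUI )
{
    std::vector<std::string> passes;
    passes.push_back( "Clear" );          // render target and depth cleared first
    if ( settingsActive )
    {
        passes.push_back( "SettingsDlg" );
        return passes;                    // early return: no scene, no HUD, no stats
    }
    passes.push_back( "Scene" );          // stands in for the elided scene rendering
    if ( showUI )
    {
        passes.push_back( "HUD" );
        passes.push_back( "SampleUI" );
    }
    passes.push_back( "Stats" );          // RenderText10()
    return passes;
}
```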

In OnLostDevice:

```cpp
void CALLBACK OnD3D10ReleasingSwapChain( void* pUserContext )
{
    g_DialogResourceManager.OnD3D10ReleasingSwapChain();
}

void CALLBACK OnD3D9LostDevice( void* pUserContext )
{
    //HUD
    g_DialogResourceManager.OnD3D9LostDevice();
    g_D3DSettingsDlg.OnD3D9LostDevice();
    …
}
```

We release g_DialogResourceManager resources. In D3D9 we have to poke the g_D3DSettingsDlg object and let it manage its device dependent resources.

In OnDestroyDevice:

```cpp
void CALLBACK OnD3D10DestroyDevice( void* pUserContext )
{
    //HUD
    g_DialogResourceManager.OnD3D10DestroyDevice();
    g_D3DSettingsDlg.OnD3D10DestroyDevice();
    DXUTGetGlobalResourceCache().OnDestroyDevice();
    …
}

void CALLBACK OnD3D9DestroyDevice( void* pUserContext )
{
    g_DialogResourceManager.OnD3D9DestroyDevice();
    g_D3DSettingsDlg.OnD3D9DestroyDevice();
    …
}
```

In both paths we destroy the g_DialogResourceManager and g_D3DSettingsDlg device objects; the D3D10 path also releases the device objects held by the DXUT global resource cache (DXUTGetGlobalResourceCache).

Then in MsgProc:

```cpp
LRESULT CALLBACK MsgProc( HWND hWnd, UINT uMsg, WPARAM wParam, LPARAM lParam,
                          bool* pbNoFurtherProcessing, void* pUserContext )
{
    // Pass messages to dialog resource manager calls
    // so GUI state is updated correctly
    *pbNoFurtherProcessing = g_DialogResourceManager.MsgProc( hWnd, uMsg, wParam, lParam );
    if( *pbNoFurtherProcessing )
        return 0;

    // Pass messages to settings dialog if it's active
    if( g_D3DSettingsDlg.IsActive() )
    {
        g_D3DSettingsDlg.MsgProc( hWnd, uMsg, wParam, lParam );
        return 0;
    }

    // Give the dialogs a chance to handle the message first
    *pbNoFurtherProcessing = g_HUD.MsgProc( hWnd, uMsg, wParam, lParam );
    if( *pbNoFurtherProcessing )
        return 0;
    *pbNoFurtherProcessing = g_SampleUI.MsgProc( hWnd, uMsg, wParam, lParam );
    if( *pbNoFurtherProcessing )
        return 0;
    …
}
```

We call the MsgProc methods of g_DialogResourceManager, g_D3DSettingsDlg, g_HUD, and g_SampleUI so they can handle UI events such as mouse clicks and key presses.
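The order of those MsgProc checks matters. The sketch below models it as a chain of handlers; UiRouter and Handler are hypothetical names, and window messages are reduced to plain integers. Route returns true when MsgProc would return early, skipping the app’s own message handling. As in the code above, an active settings dialog receives the message and stops everything after it, whatever it returns.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// A handler returns true if it consumed the message.
using Handler = std::function<bool( unsigned msg )>;

struct UiRouter
{
    Handler resourceManager;          // g_DialogResourceManager.MsgProc
    bool settingsActive = false;      // g_D3DSettingsDlg.IsActive()
    Handler settingsDlg;              // g_D3DSettingsDlg.MsgProc
    std::vector<Handler> dialogs;     // g_HUD, then g_SampleUI

    // True if MsgProc returns early and the app's own handling is skipped.
    bool Route( unsigned msg ) const
    {
        if ( resourceManager && resourceManager( msg ) )
            return true;
        if ( settingsActive )
        {
            // Modal: the settings dialog sees the message, nothing after it does.
            if ( settingsDlg )
                settingsDlg( msg );
            return true;
        }
        for ( const Handler& h : dialogs )
            if ( h && h( msg ) )
                return true;          // first dialog to consume it wins
        return false;
    }
};
```

Because g_HUD is checked before g_SampleUI, a message consumed by the HUD never reaches the slider dialog, and while the settings dialog is open neither dialog sees any input.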

With that we are done with the UI handling!

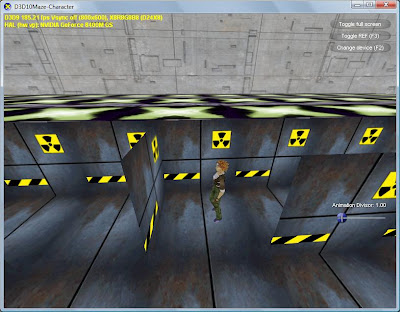

UI Screenshot

Figure 3:UI controls

By default, the UI isn’t shown. Hit the F1 key, though, and there you have it, as in Figure 3. Try using the slider to speed up and slow down the animated rendering. To me, the default speed seems a bit quick, and the character appears to “slide” on the ground. Slowing the animation to about 0.8 of the default speed (a divisor of roughly 1.25) looks better to me. YMMV.

Conclusion

This sample builds on the previous ones. Now we have a skybox rendering, a maze generating and rendering, a simple solver for the maze, camera controls, skinned characters, and UI controls.

Animated characters are a must for a modern game title, and this sample shows how to load, animate, and render a skinned character with both D3D APIs. Two things to note:

1) The Soldier textures in the DX SDK are all 1K x 1K, or about 4 MB each at 32 bits per texel, and the rendering quality does not make it clear that paying that cost is worth it. So I am providing 256x256 textures, about 256 KB each. I don’t notice a difference in quality.

2) I think it helps to have both the cell floor and the animated character differ between the two APIs, so you know which API you are seeing. If the difference bothers you, re-implementing Tiny for D3D10 is an obvious next step.
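The texture sizes in the first note follow from simple arithmetic. Here is a sketch, assuming uncompressed textures at 4 bytes per texel (the note does not say which format the SDK files use, and block-compressed formats would be smaller), with an option to include a full mip chain, which adds roughly one third. TextureBytes is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>

// Bytes for a width x height texture at bytesPerTexel, optionally with
// every mip level down to 1x1.
inline std::uint64_t TextureBytes( std::uint32_t width, std::uint32_t height,
                                   std::uint32_t bytesPerTexel = 4,
                                   bool withMips = false )
{
    assert( width > 0 && height > 0 );
    std::uint64_t total = 0;
    for ( ;; )
    {
        total += std::uint64_t( width ) * height * bytesPerTexel;
        if ( !withMips || ( width == 1 && height == 1 ) )
            break;
        // Each mip level halves both dimensions, clamped at 1.
        width = width > 1 ? width / 2 : 1;
        height = height > 1 ? height / 2 : 1;
    }
    return total;
}
```

For example, TextureBytes(1024, 1024) is 4,194,304 bytes (4 MB) and TextureBytes(256, 256) is 262,144 bytes (256 KB), a 16x saving per texture; with a full mip chain the 256x256 texture grows to 349,524 bytes.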

Next up in the series are:

· additional maze rendering features, including a simulated texture load,

· generating a new maze with new start/stop locations when the current one is fully solved, and

· rendering an animated flag for the start and stop or “solved” positions in the maze, let’s call them the goals.

After that, who knows? Perhaps some optimization articles, perhaps rendering some objects in the rooms (cells) in the maze, and perhaps some new style rendering once I can talk about it. Stay tuned!
