Monday, December 15, 2008

News Alert: Intel Visual Computing Summit

You can see from the agenda that this was a serious event, with on-target topics and high-level speakers.

What you cannot see from the agenda is that the attendees were also high-level. By this I mean decision makers: CEOs, CTOs, VPs of Business Development, VPs of Development, and senior-level architects and engineers. A great crew.

At DreamWorks we got to see clips from the forthcoming “Monsters vs Aliens” in 3D as well as hear Jeffrey Katzenberg talk about storytelling and technology. It’s no secret that DreamWorks is investing in 3D movies. I helped get stereo support in DirectX7, so in general I am a proponent of advanced features like this. Even so, I was struck by how effective this new generation of stereo is; it has really come a long way. And having the work be done on the production side, instead of only as a post-processing step like back in DirectX7, really frees the film’s designers to leverage the technology. It looks like DreamWorks has a solid handle on the distribution side too, so this may be a way to bring life back to the theater experience. The studio’s campus is way cool too.

Thursday was the 1st day of sessions, kicked off by Renee James. This is the 2nd time I have seen her speak, and she delivered a really strong opening to the summit. Then it was off to breakout sessions and featured presenters. These were so high-quality I wished I could have attended all of them, but let me give you the flavor of a few of them.

Chas Boyd, D3D Architect, had a breakout session where he talked about D3D11 and how Compute Shaders unlock more potential in the GPU. If it isn’t clear yet, Intel intends Larrabee to be a great D3D device, so developers who don’t want to take special advantage of Larrabee features will still get to see their titles run. And it’s no secret that there are two other modes to use to program Larrabee:

1) a hybrid or mixed mode where D3D is used as much as the developer wants and only specific features are moved to Larrabee native

2) full-on native where the developer moves everything to Larrabee native

Gabe Newell gave a breakout session on Steam, and how the gaming and customer mix has changed over time and how Steam data helps him make decisions.

Will Wright gave a great talk even though he deviated from his original topics. Even with the great content in the other talks, this may have been the high-water mark of the conference. Will is just flat out an engaging speaker and it was a pleasure to be there for this talk.

The Havok breakout session by David Coghlan was also interesting, and it is clear Larrabee is about more than just graphics.

Thursday night we all played poker, at an event managed by the World Poker Tour. I suck at poker, but it was a great time and felt like the real deal. The last table was a treat; they were up on stage and being filmed and professionally announced for the entire time. As a consolation prize, I picked up a hat for my brother who is serious about poker. And the hand-rolled cigars were great.

Friday Pat Gelsinger talked about how serious Intel is about the Larrabee hardware project and what the long-term goals are. And then Elliot Garbus talked about the Larrabee software side and what Intel is doing to support Larrabee and developers who adopt it.

Doug Carmean, Intel Fellow and Larrabee Chief Architect, had a breakout session where he talked about the hardware architecture, with more details on the hardware than we gave out at SIGGRAPH 2008, since this was an RS-NDA event.

Steve Junkins, Principal Engineer and Larrabee Software Architect, had a breakout session where he talked about the hybrid and native software programming model to give attendees a feel for developing on Larrabee.

And the Project Offset team gave the last session of the show with a discussion about what the Offset engine is doing with Larrabee and a demo. Offset should be the perfect showcase title for Larrabee.

And Renee book-ended her opening talk with closing remarks.

All in all this was quite a roster of speakers and demos. The atmosphere was intense and the discussions in the hallways and the bars afterwards were electric. It was exciting to talk architecture and possibilities with a wide range of industry people; I have to say this was one of the best events I have attended in the last 5 years.

And I believe it went above and beyond the goal of impressing upon the audience, and through them the industry, that:

1) Intel gets PC gaming and is a great partner for PC gaming ISVs,

2) Intel is deadly serious about Larrabee and the Larrabee architecture and it is going to change the face of PC computing with Larrabee.

Now back to the programming articles.

Saturday, December 13, 2008

Maze Sample : Article 3 - D3D10Maze-Skybox

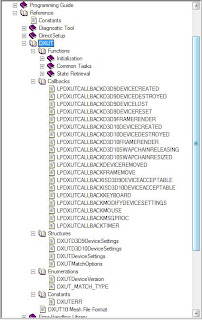

I forgot to mention one more important source of information on DXUT, and that is the reference section in the SDK Programming Guide. Image 1 shows a view of the DX SDK Documentation Table of Contents to help you find it:

This provides definitions for the Functions (Initialization, Common Tasks, State Retrieval), the Callbacks, the Structures, the Enumerations, the Constants, and the SDK Mesh file format. This still doesn’t fully document what is available, but it does get us a major step in that direction. For instance the DXUTGetFrameStats and DXUTGetDeviceStats methods are defined under Functions->State Retrieval.

Now we know there are 3 areas we can find information on DXUT.

Application Policy

Now that we have the gist of DXUT and the Base application under control, it’s time to do something useful.

First, let’s determine a couple of policies.

1) Framework

We have already decided to use DXUT.

2) Models

We will use .x files where we can and .sdkmesh where we are forced to.

3) Rendering Style

We are going to use HLSL and Effect files to render all objects.

4) Media

We are going to use separate model and effect files for the D3D9 and D3D10 code paths. This will allow us to modify them appropriately for each code path, for instance to use SM 2.0 and SM 4.0 as appropriate.

Now that we have that clear, let’s get ready to render!

Skyboxology

It’s one thing to render inside the maze. But what if we want to render outside the maze, looking down at it? What is outside the maze?

Auto-generating a skybox seems less than useful because in most cases games will be loading pre-existing models. Most games have their own custom format. We will re-use the SDK formats.

Unfortunately, the D3D10 SDK chose to move away from .x files, which had broad support in interchange and modeling tools, and instead “rolled their own” format, sdkmesh. Ours not to wonder why, to misquote the poet. This does complicate life, and it generates quite a bit of grumbling out on the forums and newsgroups, and I am sure it will generate some comments here.

It’s true it’s unfortunate. However, we will endeavor to work around this with a minimum of fuss and keep D3D9 and D3D10 rendering as similar as we can.

It pays to do your research. As I noted in the previous article, the NV SDK also uses DXUT. Interestingly enough, it publishes a file that the DX SDK used to publish, but current DX SDKs do not. This file is sdkmesh_old (both .cpp and .h). This is such a highly useful file I am quite surprised it was dropped. This file contains the code to load .x files and render them using D3D10. And it does so with class names that do not collide with the new sdkmesh loader class names. We will end up using both, so that is a good thing.

SDKMesh_old has two key classes we will use, CDXUTMesh10 and CDXUTMesh. Again, interestingly enough, CDXUTMesh10 loads the files using D3D9 D3DX methods, extracts the vertex buffer data, index buffer data, material data, and texture data, and copies the data across to D3D10 equivalents. Given the shocking reduction in D3DX surface area between D3D9 and D3D10, that is about the best we can do unless we painstakingly recreate the missing D3DX methods. While that may be a worthy effort, it is certainly not a goal of this article series.

Skybox Application Project

So we are going to load a skybox .x file in both D3D9 and D3D10. Conveniently, the SDK provides us one. The ConfigSystem sample uses cell.x as one of the media files. This model looks like one is inside a big, futuristic hangar. The Millennium Falcon or any other spaceship would not look out of place. Yes, the Maze does look a bit out of place inside a hangar, but this is a decision of convenience and not appropriateness. And we could always muck with the textures.

Given the reliance on D3D9 in the sdkmesh_old code, in this article I will start with the D3D9 implementation.

D3D9 Code Path – Globals

We’ll use the following globals for the basic cameras, the skybox vertex, and the D3D9 resources:

//maze camera

extern BOOL g_bSelfCam;

extern BOOL g_bSkyCam;

extern CModelViewerCamera g_SkyCamera; // A model viewing camera

extern CMazeFirstPersonCamera g_SelfCamera; // A fp camera

//skybox

struct SKYBOX_VERTEX

{

D3DXVECTOR3 pos;

D3DXVECTOR3 normal;

D3DXVECTOR2 tex;

};

ID3DXEffect* g_pEffectSkybox9;

D3DXHANDLE g_hSkyboxTech; // Technique to use

D3DXHANDLE g_hSkyboxTex;

CDXUTXFileMesh g_SkyboxMesh; // Object to use for skybox

D3DXHANDLE g_hmSkyboxWorldViewProjHandle9;

DXUT defines a couple of camera types for us, a “model viewer” camera that moves around a model that is “planted” at a static viewer location, and a “first person shooter” camera that can be used to roam around the world. We’ll use an FPS-style camera for the “self” position where we navigate and solve the maze, and a model viewer style camera for a remote viewing camera that will sit above the maze.

The model viewer camera we’ll call the sky camera and use variable g_SkyCamera of type CModelViewerCamera to manipulate it.

And we’ll define a “first person shooter” camera using variable g_SelfCamera of type CMazeFirstPersonCamera that will be used at the maze navigation point when we code up the “solver”.

Next we define a very simple vertex type SKYBOX_VERTEX with a position, a normal, and texture coordinates for the skybox.

Then we declare a variable to hold the pointer to our effect, g_pEffectSkybox9, along with a couple of handles to allow us to access the technique and the skybox texture.

Then we declare a CDXUTXFileMesh object to contain the skybox and a handle to allow us to access the combined world-view-projection matrix used to position and render the skybox.

D3D9 Code Path – Callbacks

We again have the same set of callbacks in the D3D9 code path that handle creation, destruction, rendering, animation, and input. I’ll describe only the new bits, and you can refer to the previous article for the rest of the method if you cannot recall what is going on.

OnD3D9CreateDevice creates all the long-lived resources we need, basically D3DPOOL_MANAGED resources. The implementation is as follows:

// Create any D3D9 resources that will live through a reset

// and aren't tied to the back buffer size

//---------------------------------------------------------

HRESULT CALLBACK OnD3D9CreateDevice( IDirect3DDevice9* pd3dDevice, const D3DSURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

HRESULT hr;

V_RETURN( D3DXCreateFont( pd3dDevice, 15, 0, FW_BOLD, 1, FALSE,

DEFAULT_CHARSET,

OUT_DEFAULT_PRECIS, DEFAULT_QUALITY,

DEFAULT_PITCH | FF_DONTCARE,

L"Arial", &g_pFont9 ) );

WCHAR str[MAX_PATH];

// Initialize the skybox mesh

V_RETURN( DXUTFindDXSDKMediaFileCch(str, MAX_PATH, L"media\\cell9.x" ));

if( FAILED( g_SkyboxMesh.Create( pd3dDevice, str ) ) )

return DXUTERR_MEDIANOTFOUND;

DWORD dwShaderFlags = D3DXFX_NOT_CLONEABLE;

#if defined( DEBUG ) || defined( _DEBUG )

// Set the D3DXSHADER_DEBUG flag to embed debug information in the shaders.

// Setting this flag improves the shader debugging experience, but still allows

// the shaders to be optimized and to run exactly the way they will run in

// the release configuration of this program.

dwShaderFlags |= D3DXSHADER_DEBUG;

#endif

#ifdef DEBUG_VS

dwShaderFlags |= D3DXSHADER_FORCE_VS_SOFTWARE_NOOPT;

#endif

#ifdef DEBUG_PS

dwShaderFlags |= D3DXSHADER_FORCE_PS_SOFTWARE_NOOPT;

#endif

// Read the skybox D3DX effect file

V_RETURN( DXUTFindDXSDKMediaFileCch( str, MAX_PATH,

// If this fails, there should be debug output as to

// why the .fx file failed to compile

V_RETURN( D3DXCreateEffectFromFile( pd3dDevice, str, NULL, NULL,

dwShaderFlags,

NULL, &g_pEffectSkybox9, NULL ) );

// Obtain the parameter handles

g_hSkyboxTech = g_pEffectSkybox9->GetTechniqueByName( "RenderSkybox" );

g_hSkyboxTex = g_pEffectSkybox9->GetParameterByName( NULL,

"g_txDiffuse");

g_hmSkyboxWorldViewProjHandle9 = g_pEffectSkybox9->GetParameterByName(

NULL,

"g_mWorldViewProj" );

// Setup the sky camera's view parameters

D3DXVECTOR3 vecEye(0.0f, 4.0f, 0.0f);

D3DXVECTOR3 vecAt (15, 0, 15.0);

g_SkyCamera.SetViewParams(&vecEye, &vecAt);

return S_OK;

}

Here we locate the .x file ( cell9.x ) using DXUTFindDXSDKMediaFileCch. It’s stored in the media subfolder. Then we create the mesh by calling the CDXUTXFileMesh method Create through g_SkyboxMesh.Create.

Then we locate the .fx file ( skybox9.fx ) using the same DXUTFindDXSDKMediaFileCch method. We load the effect file using D3DXCreateEffectFromFile, then retrieve the technique handle using g_pEffectSkybox9->GetTechniqueByName and the parameter handles using g_pEffectSkybox9->GetParameterByName.

Note the pattern here, “locate a resource, create it”.

Next, in OnD3D9ResetDevice, we create resources that won’t persist past a reset, basically D3DPOOL_DEFAULT resources. The implementation is as follows:

// Create any D3D9 resources that won't live through reset

// or that are tied to the back buffer size

HRESULT CALLBACK OnD3D9ResetDevice( IDirect3DDevice9* pd3dDevice,

const D3DSURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

HRESULT hr;

//hud

if( g_pFont9 ) V_RETURN( g_pFont9->OnResetDevice() );

V_RETURN( D3DXCreateSprite( pd3dDevice, &g_pSprite9 ) );

g_pTxtHelper = new CDXUTTextHelper( g_pFont9, g_pSprite9, NULL, NULL,

15 );

//skybox

V_RETURN( g_SkyboxMesh.RestoreDeviceObjects( pd3dDevice ) );

if( g_pEffectSkybox9 ) V_RETURN( g_pEffectSkybox9->OnResetDevice() );

//cameras

float fAspectRatio = pBackBufferSurfaceDesc->Width /

(FLOAT) pBackBufferSurfaceDesc->Height;

g_SkyCamera.SetProjParams( D3DX_PI / 2, fAspectRatio, 0.1f, 5000.0f );

g_SkyCamera.SetWindow( pBackBufferSurfaceDesc->Width,

pBackBufferSurfaceDesc->Height );

g_SkyCamera.SetButtonMasks( 0, MOUSE_WHEEL,

MOUSE_RIGHT_BUTTON | MOUSE_LEFT_BUTTON );

g_SelfCamera.SetProjParams( D3DX_PI / 2, fAspectRatio, 0.1f, 5000.0f );

D3DXVECTOR3 vecEye(0.0f, 0.5f, 0.0f);

D3DXVECTOR3 vecAt (1.0f, 0.5f, 1.0f);

g_SelfCamera.SetViewParams( &vecEye, &vecAt );

return S_OK;

}

Here we restore any device-dependent objects in the mesh by invoking g_SkyboxMesh.RestoreDeviceObjects.

We do the same for the effect using g_pEffectSkybox9->OnResetDevice.

And then we reset our cameras based on the back buffer width and height using SetProjParams. In the case of the sky camera, we also tell it the window size using SetWindow. In addition, this camera responds to the mouse, so we call SetButtonMasks to tell the camera class which buttons it should accept user input from.

Last for this method, we reset the view for the self camera using SetViewParams.

Next in OnD3D9LostDevice we handle D3DPOOL_DEFAULT style resources. The implementation is:

//---------------------------------------------------------

// Release D3D9 resources created in OnD3D9ResetDevice

//---------------------------------------------------------

void CALLBACK OnD3D9LostDevice( void* pUserContext )

{

//HUD

if( g_pFont9 ) g_pFont9->OnLostDevice();

SAFE_RELEASE( g_pSprite9 );

SAFE_DELETE( g_pTxtHelper );

//skybox

g_SkyboxMesh.InvalidateDeviceObjects();

if( g_pEffectSkybox9 ) g_pEffectSkybox9->OnLostDevice();

}

Here we release the resources for the mesh and the effect using g_SkyboxMesh.InvalidateDeviceObjects and g_pEffectSkybox9->OnLostDevice.

Next in OnD3D9DestroyDevice we deal with D3DPOOL_MANAGED resources that need to be cleaned up. The implementation is as follows:

//---------------------------------------------------------

// Release D3D9 resources created in OnD3D9CreateDevice

//---------------------------------------------------------

void CALLBACK OnD3D9DestroyDevice( void* pUserContext )

{

//HUD

SAFE_RELEASE( g_pFont9 );

//d3d skybox

g_SkyboxMesh.Destroy();

SAFE_RELEASE( g_pEffectSkybox9 );

}

Here we destroy the mesh using g_SkyboxMesh.Destroy and release the effect using SAFE_RELEASE( g_pEffectSkybox9 ).
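To make the pairing of the four D3D9 callbacks concrete, here is a minimal sketch of the order in which they run. It is not DXUT code: LifecycleSketch and RunTypicalSession are hypothetical names, and the two flags simply stand in for resources created at device creation versus resources created at reset time.

```cpp
#include <string>
#include <vector>

// Hypothetical model of the resources the four D3D9 callbacks manage.
struct LifecycleSketch {
    bool managedAlive = false;   // created in CreateDevice, freed in DestroyDevice
    bool defaultAlive = false;   // created in ResetDevice, freed in LostDevice
    std::vector<std::string> log;

    void OnCreateDevice()  { managedAlive = true;  log.push_back("create"); }
    void OnResetDevice()   { defaultAlive = true;  log.push_back("reset"); }
    void OnLostDevice()    { defaultAlive = false; log.push_back("lost"); }
    void OnDestroyDevice() { managedAlive = false; log.push_back("destroy"); }
};

// Simulates startup, one device reset (for example a window resize), and shutdown.
LifecycleSketch RunTypicalSession() {
    LifecycleSketch s;
    s.OnCreateDevice();
    s.OnResetDevice();
    s.OnLostDevice();    // device is reset: only the reset-time resources are released
    s.OnResetDevice();   // and then recreated
    s.OnLostDevice();    // shutdown releases reset-time resources first
    s.OnDestroyDevice(); // then the long-lived ones
    return s;
}
```

The point of the sketch is that the Lost/Reset pair can run many times between a single Create and Destroy, which is why the font sprite and text helper are rebuilt in the reset callback while the mesh and effect objects live for the whole session.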

Next in OnD3D9FrameRender we render the skybox.

//---------------------------------------------------------

// Render the scene using the D3D9 device

//---------------------------------------------------------

void CALLBACK OnD3D9FrameRender( IDirect3DDevice9* pd3dDevice,

double fTime, float fElapsedTime,

void* pUserContext )

{

HRESULT hr;

// Clear the render target and the zbuffer

V( pd3dDevice->Clear( 0, NULL, D3DCLEAR_TARGET | D3DCLEAR_ZBUFFER,

D3DCOLOR_ARGB( 0, 45, 50, 170 ),

1.0f, 0 ) );

// Render the scene

if( SUCCEEDED( pd3dDevice->BeginScene() ) )

{

//camera matrices

D3DXMATRIX mWorldView;

D3DXMATRIX mWorldViewProj;

D3DXMATRIX mViewSkybox;

D3DXMATRIX mView;

D3DXMATRIX mProj;

//proj

mProj = *g_SkyCamera.GetProjMatrix();

//view

if ( g_bSelfCam )

{

mView = g_SelfCamera.Render9(pd3dDevice);

mViewSkybox = mView;

}

else if ( g_bSkyCam )

{

mView = *g_SkyCamera.GetViewMatrix();

mViewSkybox = mView;

}

//scale and pos the skybox

D3DXMATRIX mSkyScale, mSkyPos;

D3DXMatrixTranslation( &mSkyPos, 1.0f, 3.0f, 1.5f );

D3DXMatrixScaling( &mSkyScale, 8.0f, 4.0f, 6.0f );

D3DXMatrixMultiply( &mWorldViewProj, &mViewSkybox, &mProj );

D3DXMatrixMultiply( &mWorldViewProj, &mSkyScale,&mWorldViewProj);

D3DXMatrixMultiply( &mWorldViewProj, &mSkyPos, &mWorldViewProj);

// Render the skybox

UINT cPass;

pd3dDevice->SetStreamSource( 0, g_SkyboxMesh.m_pVB, 0,

sizeof(SKYBOX_VERTEX) );

pd3dDevice->SetVertexDeclaration( g_SkyboxMesh.m_pDecl );

V( g_pEffectSkybox9->SetMatrix( g_hmSkyboxWorldViewProjHandle9,

&mWorldViewProj) );

g_SkyboxMesh.Render(g_pEffectSkybox9,g_hSkyboxTex,NULL,NULL,

NULL,NULL,NULL,true,true);

//HUD

RenderText();

V( pd3dDevice->EndScene() );

}

}

Here we perform some work with the cameras, calling g_SelfCamera.Render9 for the FPS camera and g_SkyCamera.GetViewMatrix for the model viewer camera to obtain the view matrix. With that in hand we scale and translate the .x file and use the view and projection matrices to construct a world-view-projection matrix to render with. We then set the skybox vertex buffer and vertex format using SetStreamSource and SetVertexDeclaration, respectively, and set the world-view-projection matrix we constructed using g_pEffectSkybox9->SetMatrix and its effect handle, g_hmSkyboxWorldViewProjHandle9. Finally we invoke the mesh’s render method, g_SkyboxMesh.Render, and pass in the effect and texture handle.
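The order of the three D3DXMatrixMultiply calls matters. D3DX treats vertices as row vectors, so the left-most matrix in a product is applied to a vertex first. The sketch below is a self-contained illustration rather than the sample’s code: Mat4, Translation, Scaling, Multiply, and TransformPoint are hypothetical stand-ins for the D3DX types and functions. It uses the same translation and scale values as the listing to show that putting the translation on the left of the scale also scales the translation offset.

```cpp
#include <array>

// Hypothetical stand-in for D3DXMATRIX: row-major 4x4, used with row vectors.
using Mat4 = std::array<std::array<float, 4>, 4>;

Mat4 Identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

// Row-vector convention: the translation lives in the fourth row.
Mat4 Translation(float x, float y, float z) {
    Mat4 m = Identity();
    m[3][0] = x; m[3][1] = y; m[3][2] = z;
    return m;
}

Mat4 Scaling(float x, float y, float z) {
    Mat4 m = Identity();
    m[0][0] = x; m[1][1] = y; m[2][2] = z;
    return m;
}

// Returns a * b; with row vectors, a is applied to a point before b.
Mat4 Multiply(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Transforms the point (x, y, z, 1) as a row vector: p' = p * m.
std::array<float, 3> TransformPoint(const Mat4& m, float x, float y, float z) {
    const float p[4] = { x, y, z, 1.0f };
    std::array<float, 3> out{};
    for (int j = 0; j < 3; ++j)
        for (int k = 0; k < 4; ++k)
            out[j] += p[k] * m[k][j];
    return out;
}

// Same order as the listing: translation on the left, scale on the right.
Mat4 TranslateThenScale() {
    return Multiply(Translation(1.0f, 3.0f, 1.5f), Scaling(8.0f, 4.0f, 6.0f));
}

// The alternative order: scale first, then translate.
Mat4 ScaleThenTranslate() {
    return Multiply(Scaling(8.0f, 4.0f, 6.0f), Translation(1.0f, 3.0f, 1.5f));
}
```

With the listing’s order, the model origin ends up at ( 8, 12, 9 ) before the view transform, because the ( 1, 3, 1.5 ) offset is multiplied by the scale factors. With the alternative order it ends up at ( 1, 3, 1.5 ). Either can be what you want; it just pays to know which one you are getting when you tune the numbers.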

Remember the framework has only one OnFrameMove method and it is device independent.

So that’s it for the D3D9 code path. On to D3D10!

D3D10 Code Path-Globals

The camera variables are the same, no need to cover those again.

The camera effect handles and skybox globals are significantly different.

//camera/view handles for effects

ID3D10EffectMatrixVariable* g_pmSkyboxWorldViewProj = NULL;

ID3D10EffectMatrixVariable* g_pmSkyboxWorldView = NULL;

// x file support

#include "sdkmesh_old.h"

CDXUTMesh10 g_SkyboxMesh;

//skybox effect

ID3D10Effect* g_pEffectSkybox = NULL;

ID3D10EffectTechnique* g_pRenderSkybox = NULL;

ID3D10InputLayout* g_pSkyboxInputLayout = NULL;

const D3D10_INPUT_ELEMENT_DESC SkyboxVertexLayout[] =

{

{ "POSITION",0, DXGI_FORMAT_R32G32B32_FLOAT,0, 0, D3D10_INPUT_PER_VERTEX_DATA, 0 },

{ "NORMAL", 0, DXGI_FORMAT_R32G32B32_FLOAT,0, 12, D3D10_INPUT_PER_VERTEX_DATA, 0 },

{ "TEXCOORD",0, DXGI_FORMAT_R32G32_FLOAT, 0, 24, D3D10_INPUT_PER_VERTEX_DATA, 0 },

};

struct SkyboxVertex

{

D3DXVECTOR3 pos;

D3DXVECTOR3 norm;

D3DXVECTOR2 tex;

};

Here you see D3D10-style effect and input element definitions, along with the inclusion of the sdkmesh_old.h header file.

This is pretty close to the D3D9 code, about as close as D3D10 will let us get.
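The byte offsets in SkyboxVertexLayout ( 0, 12, 24 ) have to agree with the memory layout of the SkyboxVertex struct. Here is a small sketch of how that can be checked at compile time. Vec3, Vec2, SkyboxVertexSketch, and SkyboxStride are hypothetical stand-ins so the snippet builds without the D3DX headers; it assumes the vector types are plain packed floats.

```cpp
#include <cstddef>

// Hypothetical stand-ins for D3DXVECTOR3 and D3DXVECTOR2 (plain floats, no padding).
struct Vec3 { float x, y, z; };
struct Vec2 { float x, y; };

// Mirrors the SkyboxVertex struct from the listing.
struct SkyboxVertexSketch {
    Vec3 pos;
    Vec3 norm;
    Vec2 tex;
};

// Each offset must match the byte-offset column of SkyboxVertexLayout.
static_assert(offsetof(SkyboxVertexSketch, pos)  == 0,  "POSITION starts at byte 0");
static_assert(offsetof(SkyboxVertexSketch, norm) == 12, "NORMAL follows 3 floats");
static_assert(offsetof(SkyboxVertexSketch, tex)  == 24, "TEXCOORD follows 6 floats");
static_assert(sizeof(SkyboxVertexSketch) == 32, "stride is 8 floats");

// The per-vertex stride in bytes.
constexpr std::size_t SkyboxStride() { return sizeof(SkyboxVertexSketch); }
```

If someone later adds a field to the struct without updating the layout table, a check like this fails at build time instead of producing scrambled geometry at run time.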

D3D10 Code Path-Callbacks

We again have the same set of callbacks in the D3D10 code path that handle creation, destruction, rendering, animation, and input.

OnD3D10CreateDevice creates all the long-lived resources we need. The implementation is as follows:

//---------------------------------------------------------

// Create any D3D10 resources that aren't back buffer dependent

//---------------------------------------------------------

HRESULT CALLBACK OnD3D10CreateDevice( ID3D10Device* pd3dDevice,

const DXGI_SURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

HRESULT hr;

V_RETURN( D3DX10CreateFont( pd3dDevice, 15, 0, FW_BOLD, 1, FALSE,

DEFAULT_CHARSET,

OUT_DEFAULT_PRECIS, DEFAULT_QUALITY,

DEFAULT_PITCH | FF_DONTCARE,

L"Arial", &g_pFont10 ) );

V_RETURN( D3DX10CreateSprite( pd3dDevice, 512, &g_pSprite10 ) );

g_pTxtHelper = new CDXUTTextHelper( NULL, NULL,

g_pFont10, g_pSprite10, 15 );

// prepare for effect files

D3D10_PASS_DESC PassDesc;

ID3D10EffectPass* pPass;

ID3D10Blob* l_p_Errors = NULL;

LPVOID l_pError = NULL;

WCHAR str[MAX_PATH];

DWORD dwShaderFlags = D3D10_SHADER_ENABLE_STRICTNESS;

#if defined( DEBUG ) || defined( _DEBUG )

// Set the D3D10_SHADER_DEBUG flag to embed debug information in the shaders.

// Setting this flag improves the shader debugging experience, but still allows

// the shaders to be optimized and to run exactly the way they will run in

// the release configuration of this program.

dwShaderFlags |= D3D10_SHADER_DEBUG;

#endif

// Read the skybox D3DX effect file

V_RETURN( DXUTFindDXSDKMediaFileCch( str, MAX_PATH,

L"effects\\skybox10.fx" ) );

V_RETURN( D3DX10CreateEffectFromFile( str,

NULL, NULL, "fx_4_0",

dwShaderFlags, 0, pd3dDevice, NULL,

NULL, &g_pEffectSkybox,

&l_p_Errors, NULL ));

if( l_p_Errors )

{

l_pError = l_p_Errors->GetBufferPointer();

// then cast to a char* to see it in the locals window

}

//technique

g_pRenderSkybox = g_pEffectSkybox->GetTechniqueByName("RenderSkybox");

// Obtain the parameter handles

g_pmSkyboxWorldViewProj = g_pEffectSkybox->GetVariableByName( "g_mWorldViewProj" )->AsMatrix();

g_pmSkyboxWorldView = g_pEffectSkybox->GetVariableByName( "g_mWorldView" )->AsMatrix();

//create the skybox layout

pPass = g_pRenderSkybox->GetPassByIndex( 0 );

pPass->GetDesc( &PassDesc );

V( pd3dDevice->CreateInputLayout( SkyboxVertexLayout,

PassDesc.pIAInputSignature,

PassDesc.IAInputSignatureSize,

&g_pSkyboxInputLayout ) );

//load the skybox mesh

V_RETURN(DXUTFindDXSDKMediaFileCch(str, MAX_PATH, L"media\\cell10.x"));

V_RETURN(g_SkyboxMesh.Create(pd3dDevice, str,

(D3D10_INPUT_ELEMENT_DESC*)SkyboxVertexLayout,

sizeof(SkyboxVertexLayout)/sizeof(D3D10_INPUT_ELEMENT_DESC)));

// Setup the sky camera's view parameters

D3DXVECTOR3 vecEye(0.0f, 4.0f, 0.0f);

D3DXVECTOR3 vecAt (15, 0, 15.0);

g_SkyCamera.SetViewParams(&vecEye, &vecAt);

return S_OK;

}

Here we see similarities and differences between D3D9 and D3D10. There is the same pattern of “locate, load” using DXUTFindDXSDKMediaFileCch, first with D3DX10CreateEffectFromFile for the effect file and then with g_SkyboxMesh.Create for the .x file. Also notice the use of the l_p_Errors variable to enable viewing the error in the debugger.

The effect handle retrieval code is a bit different, though. First the technique is retrieved using g_pEffectSkybox->GetTechniqueByName. Next two matrix variables are retrieved using g_pEffectSkybox->GetVariableByName( "xxx" )->AsMatrix(); for the two variables "g_mWorldViewProj" and "g_mWorldView".

The setup code before loading the mesh is also quite different, and shows how it’s a bit easier to set up geometry when doing so with effect files. We retrieve the pass description and use it to create the input layout with CreateInputLayout, loosely similar to a D3D9 vertex declaration. Then we pass the same element description array to the g_SkyboxMesh.Create method. That’s a bit more formal than in D3D9, and this extra specificity in D3D10 is part of why it is more efficient, as the driver has less to figure out.

The camera code is largely the same so I skip it here in the interests of brevity.

Next, in OnD3D10ResizedSwapChain, we do have a bit of code to cover. Here we deal with resources that have some dependency on the back buffer or do not persist past a resize/reset.

//---------------------------------------------------------

// Create any D3D10 resources that depend on the backbuffer

//---------------------------------------------------------

HRESULT CALLBACK OnD3D10ResizedSwapChain( ID3D10Device* pd3dDevice,

IDXGISwapChain* pSwapChain,

const DXGI_SURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

HRESULT hr;

// Setup the camera's projection parameters

float fAspectRatio = pBackBufferSurfaceDesc->Width /

( FLOAT )pBackBufferSurfaceDesc->Height;

//sky camera above the maze

g_SkyCamera.SetProjParams( D3DX_PI / 2, fAspectRatio, 0.1f, 5000.0f );

g_SkyCamera.SetWindow( pBackBufferSurfaceDesc->Width,

pBackBufferSurfaceDesc->Height );

g_SkyCamera.SetButtonMasks( 0, MOUSE_WHEEL,

MOUSE_RIGHT_BUTTON | MOUSE_LEFT_BUTTON );

//self cam in the maze

g_SelfCamera.SetProjParams( D3DX_PI / 2, fAspectRatio, 0.1f, 5000.0f );

D3DXVECTOR3 vecEye(0.0f, 0.5f, 0.0f);

D3DXVECTOR3 vecAt (1.0f, 0.5f, 1.0f);

g_SelfCamera.SetViewParams( &vecEye, &vecAt );

return S_OK;

}

Here we again set up the cameras with their views and projections that depend on aspect ratios.
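Both code paths cast the height to FLOAT before dividing. That cast is doing real work, since Width and Height are unsigned integers in the surface description. A minimal sketch of the difference follows; AspectRatio and AspectRatioTruncated are hypothetical helper names, not part of DXUT.

```cpp
// Width and Height are unsigned integers in the surface description,
// so one operand must be converted to float before dividing.
float AspectRatio(unsigned width, unsigned height) {
    return width / static_cast<float>(height);
}

// What happens without the cast: integer division truncates first,
// and only the truncated result is converted to float.
float AspectRatioTruncated(unsigned width, unsigned height) {
    return static_cast<float>(width / height);
}
```

For a 640 x 480 back buffer the first function gives roughly 1.333 while the second gives exactly 1.0, which would leave the projection visibly squashed in a non-square window.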

Next, in OnD3D10ReleasingSwapChain, the beauty of D3D10 is that we have no resource handling to perform. The mesh and effect need no special handling, so I skip that code.

Next comes OnD3D10DestroyDevice, where the code is as follows:

//---------------------------------------------------------

// Release D3D10 resources created in OnD3D10CreateDevice

//---------------------------------------------------------

void CALLBACK OnD3D10DestroyDevice( void* pUserContext )

{

//HUD

SAFE_RELEASE( g_pFont10 );

SAFE_RELEASE( g_pSprite10 );

SAFE_DELETE( g_pTxtHelper );

//d3d skybox

g_SkyboxMesh.Destroy();

SAFE_RELEASE(g_pSkyboxInputLayout);

SAFE_RELEASE( g_pEffectSkybox );

}

Here we release the skybox mesh, input layout, and effect.

Next we look at OnD3D10FrameRender:

//---------------------------------------------------------

// Render the scene using the D3D10 device

//---------------------------------------------------------

void CALLBACK OnD3D10FrameRender( ID3D10Device* pd3dDevice,

double fTime, float fElapsedTime,

void* pUserContext )

{

// Clear render target and the depth stencil

float ClearColor[4] = { 0.176f, 0.196f, 0.667f, 0.0f };

pd3dDevice->ClearRenderTargetView( DXUTGetD3D10RenderTargetView(),

ClearColor );

pd3dDevice->ClearDepthStencilView( DXUTGetD3D10DepthStencilView(),

D3D10_CLEAR_DEPTH, 1.0, 0 );

//camera matrices

D3DXMATRIX mWorldView;

D3DXMATRIX mWorldViewProj;

D3DXMATRIX mViewSkybox;

D3DXMATRIX mView;

D3DXMATRIX mProj;

//proj

mProj = *g_SkyCamera.GetProjMatrix();

//view

if ( g_bSelfCam )

{

mView = g_SelfCamera.Render10(pd3dDevice);

mViewSkybox = mView;

}

else if ( g_bSkyCam )

{

mView = *g_SkyCamera.GetViewMatrix();

mViewSkybox = mView;

}

//scale and pos the skybox

D3DXMATRIX mSkyScale, mSkyPos;

D3DXMatrixTranslation( &mSkyPos, 1.0f, 3.0f, 1.5f );

D3DXMatrixScaling( &mSkyScale, 8.0f, 4.0f, 6.0f );

D3DXMatrixMultiply( &mWorldViewProj, &mViewSkybox, &mProj );

D3DXMatrixMultiply( &mWorldViewProj, &mSkyScale, &mWorldViewProj );

D3DXMatrixMultiply( &mWorldViewProj, &mSkyPos, &mWorldViewProj );

// Render the skybox

g_pmSkyboxWorldViewProj->SetMatrix( ( float* )&mWorldViewProj );

g_pmSkyboxWorldView->SetMatrix( ( float* )&mView );

pd3dDevice->IASetInputLayout(g_pSkyboxInputLayout);

g_pRenderSkybox->GetPassByIndex(0)->Apply(0);

g_SkyboxMesh.Render(pd3dDevice);

//render stats

RenderText10();

}

Here we perform similar camera handling and similar matrix setup for scaling, translation, and world location. Setting the effect variables is simpler: we call SetMatrix directly on the matrix variables, as in g_pmSkyboxWorldViewProj->SetMatrix and g_pmSkyboxWorldView->SetMatrix. Then we set the layout with IASetInputLayout, apply the effect pass with g_pRenderSkybox->GetPassByIndex(0)->Apply(0), and render with g_SkyboxMesh.Render.
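One small detail worth calling out: the D3D10 ClearColor array is the same color as the D3D9 D3DCOLOR_ARGB( 0, 45, 50, 170 ) value, just expressed as floats in red, green, blue, alpha order. The sketch below shows the conversion. PackArgb and ArgbToFloatRgba are hypothetical helpers written for this illustration; PackArgb packs the channels with alpha in the top byte, which is how the D3D9 macro lays them out.

```cpp
#include <array>
#include <cstdint>

// Packs four 8-bit channels with A in the top byte, then R, G, B.
constexpr std::uint32_t PackArgb(unsigned a, unsigned r, unsigned g, unsigned b) {
    return ((a & 0xffu) << 24) | ((r & 0xffu) << 16) | ((g & 0xffu) << 8) | (b & 0xffu);
}

// Converts a packed ARGB value to floats in { r, g, b, a } order,
// the order used by the ClearColor array in the D3D10 listing.
std::array<float, 4> ArgbToFloatRgba(std::uint32_t argb) {
    const float a = ((argb >> 24) & 0xffu) / 255.0f;
    const float r = ((argb >> 16) & 0xffu) / 255.0f;
    const float g = ((argb >> 8) & 0xffu) / 255.0f;
    const float b = (argb & 0xffu) / 255.0f;
    return {{ r, g, b, a }};
}
```

Dividing 45, 50, and 170 by 255 gives approximately 0.176, 0.196, and 0.667, which matches the literal values in the listing.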

Next in OnFrameMove we do a bit of work. Here we examine the sim state, and if we are running the sim we update the cameras. After that we handle some keypresses using GetAsyncKeyState to set global state variables.

//---------------------------------------------------------

// Handle updates to the scene.

//---------------------------------------------------------

void CALLBACK OnFrameMove( double fTime, float fElapsedTime,

void* pUserContext )

{

if ( g_bRunSim )

{

// Update the sky camera's position based on user input

g_SkyCamera.FrameMove( fElapsedTime );

//update the self camera's position based on the sim input

g_SelfCamera.FrameMove( fElapsedTime );

}

//handle key presses

if( GetAsyncKeyState( VK_SHIFT ) & 0x8000 )

{

}

else

{

if( GetAsyncKeyState( 'M' ) & 0x8000 )

{

g_bSelfCam = true;

g_bSkyCam = false;

}

if( GetAsyncKeyState( 'S' ) & 0x8000 )

{

g_bSelfCam = false;

g_bSkyCam = true;

}

if ( GetAsyncKeyState( 'P' ) & 0x8000 )

{

g_bRunSim = !g_bRunSim;

}

}

}
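One thing to be aware of: GetAsyncKeyState reports whether a key is down right now, and OnFrameMove runs every frame, so the 'P' branch above flips g_bRunSim on every frame for as long as the key is held. A common way around that is to react only to the up-to-down transition. The sketch below is one way to do it, under the assumption that the caller passes in the result of ( GetAsyncKeyState( 'P' ) & 0x8000 ) != 0 each frame; KeyEdge and ToggleOverFrames are hypothetical names, not part of the sample.

```cpp
// Hypothetical helper: reports true only on the frame a key goes from up to down.
struct KeyEdge {
    bool wasDown = false;

    bool Pressed(bool isDown) {
        const bool pressed = isDown && !wasDown;
        wasDown = isDown;
        return pressed;
    }
};

// Feeds a sequence of per-frame key states through the edge detector and
// returns the final value of a flag that is toggled once per press.
bool ToggleOverFrames(const bool* framesDown, int frameCount, bool initial) {
    KeyEdge key;
    bool flag = initial;
    for (int i = 0; i < frameCount; ++i) {
        if (key.Pressed(framesDown[i])) flag = !flag;
    }
    return flag;
}
```

Holding the key for three frames toggles the flag once, and pressing it twice returns the flag to its starting value. The 'M' and 'S' branches do not need this treatment because they set the state rather than toggle it.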

In this sample OnMouse and OnKeyboard are empty so I am skipping them to avoid the article getting too long.

Next MsgProc is as follows:

//---------------------------------------------------------

// Handle messages to the application

//---------------------------------------------------------

LRESULT CALLBACK MsgProc( HWND hWnd, UINT uMsg, WPARAM wParam, LPARAM lParam,

bool* pbNoFurtherProcessing, void* pUserContext )

{

// Pass all windows messages to camera so it can respond to user input

g_SkyCamera.HandleMessages( hWnd, uMsg, wParam, lParam );

return 0;

}

Here we invoke g_SkyCamera.HandleMessages to let the model viewer camera get a chance to handle messages.

With that we are in the final stretch.

WinMain and Screenshots

WinMain here has no new bits, so I skip it here again in the interests of brevity as this article is already large.

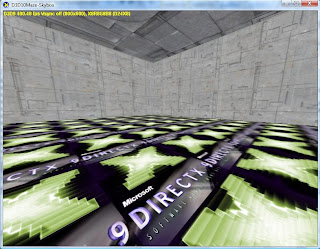

Here is the D3D9 version, snapped from the initial position of the sky camera.

Notice the use of the Direct3D9 Logo. We modified cell9.x to change the material that used "cellfloor.jpg" to now use "dx9_logo.bmp".

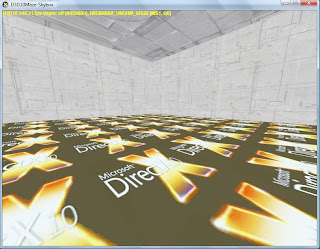

Here is the D3D10 version, snapped from the initial position of the sky camera.

Notice the use of the Direct3D10 Logo. We modified cell10.x to change the material that used "cellfloor.jpg" to now use "direct-x-10-256.bmp".

And just that easily we now have a set of customized models that reflect what API we are using. Sweet.

Here is a link to the download with the project, the source, and the media.

Conclusion

There you have it, an app that renders the “outside” so it looks like we are within a world, but with no real “inside” to navigate around. Yes, it is a bit empty in there, but the next article will begin adding the maze geometry. The maze geometry will include a floor, the walls, and a roof.

I basically hit my target of a week for this article, but the holidays are fast approaching so I do not want to over promise here. I will try to get the next article done before Christmas and then I think I will be taking a break until after the holiday when we will add navigation, animated characters, better camera controls, and a bit of texture load.

Stay tuned!

Saturday, December 6, 2008

Maze Sample : Article 2 - D3D10Maze-Base

More on DXUT

I want to spend another minute or two on DXUT, and list the files contained in a typical sample project and their basic purpose.

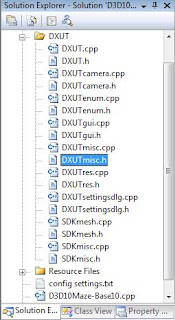

Image 1 shows the DXUT subfolder in the Base project. It contains the .h header files and .cpp source files for DXUT, DXUTcamera, DXUTenum, DXUTgui, DXUTmisc, DXUTres, DXUTsettingsdlg, SDKmesh, and SDKmisc.

Here is a quick description of what the file pairs ( .cpp, .h ) do for us:

DXUT: callbacks, device settings, etc

DXUTcamera: arcball controller and cameras

DXUTenum: device enumeration

DXUTgui: 3D UI elements

DXUTmisc: controllers, timers, etc

DXUTres: create resources from memory

DXUTsettingsdlg: device settings dialog support

SDKmesh: support for “SDKMesh” files

SDKmisc: resource cache, fonts, helpers

The samples make heavy use of the V_RETURN macro, defined in DXUT.h, which wraps checking an HRESULT and returning it to the caller on failure. I am assuming all readers are familiar with COM and with SAFE_RELEASE and SAFE_DELETE.
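
As a rough illustration of the idea (not the actual definitions from DXUT.h), here is a stripped-down sketch of what these macros boil down to. HRESULT_T, OK_T, FAIL_T, the _SKETCH macro names, and FakeComObject are all stand-ins I made up so the snippet builds without the Windows headers. It also shows why every callback that uses V_RETURN declares a local hr first.

```cpp
#include <cstddef>

// Stand-ins for the Windows types (assumptions, not the real definitions).
typedef long HRESULT_T;            // plays the role of HRESULT
const HRESULT_T OK_T   = 0;        // plays the role of S_OK
const HRESULT_T FAIL_T = -1;       // any negative value means failure

inline bool Failed( HRESULT_T hr ) { return hr < 0; }

// Simplified V_RETURN: store the result in the local 'hr' and bail out on failure.
#define V_RETURN_SKETCH( x ) { hr = ( x ); if( Failed( hr ) ) { return hr; } }

// Simplified SAFE_RELEASE / SAFE_DELETE: act only on non-null pointers, then null them.
#define SAFE_RELEASE_SKETCH( p ) { if( p ) { ( p )->Release(); ( p ) = NULL; } }
#define SAFE_DELETE_SKETCH( p )  { if( p ) { delete ( p ); ( p ) = NULL; } }

// A tiny reference-counted object standing in for a COM interface.
struct FakeComObject
{
    int refs;
    FakeComObject() : refs( 1 ) {}
    void Release() { if( --refs == 0 ) delete this; }
};

HRESULT_T StepThatFails()    { return FAIL_T; }
HRESULT_T StepThatSucceeds() { return OK_T; }

// Same shape as a create callback: if the first step fails, the macro
// returns early and the remaining steps never run.
HRESULT_T CreateThings( bool firstStepFails, int* pStepsRun )
{
    HRESULT_T hr;
    *pStepsRun = 0;
    V_RETURN_SKETCH( firstStepFails ? StepThatFails() : StepThatSucceeds() );
    ++( *pStepsRun );
    V_RETURN_SKETCH( StepThatSucceeds() );
    ++( *pStepsRun );
    return OK_T;
}
```

Because the release and delete macros null the pointer afterwards, calling them a second time on the same global is harmless, which is handy in cleanup callbacks.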

Now that we have defined the DXUT Framework as our basis of discussion and have grounding in what it gives and where to look, let’s get started with what I am calling the project baseline.

Let's cover the D3D10 callback methods, then WinMain, then the D3D9 callback methods.

Base Application Project

Globals-D3D10

ID3DX10Font* g_pFont10 = NULL;

ID3DX10Sprite* g_pSprite10 = NULL;

CDXUTTextHelper* g_pTxtHelper = NULL;

Callbacks-D3D10

We initialize the UI elements required to support the frame rate counter in OnD3D10CreateDevice as follows:

//------------------------------------------------------------------

// Create D3D10 resources that aren't dependent on the back buffer

//------------------------------------------------------------------

HRESULT CALLBACK OnD3D10CreateDevice( ID3D10Device* pd3dDevice,

const DXGI_SURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

HRESULT hr;

V_RETURN( D3DX10CreateFont( pd3dDevice, 15, 0, FW_BOLD, 1, FALSE,

DEFAULT_CHARSET,

OUT_DEFAULT_PRECIS, DEFAULT_QUALITY,

DEFAULT_PITCH | FF_DONTCARE,

L"Arial", &g_pFont10 ) );

V_RETURN( D3DX10CreateSprite( pd3dDevice, 512, &g_pSprite10 ) );

g_pTxtHelper = new CDXUTTextHelper( NULL, NULL, g_pFont10, g_pSprite10,

15 );

return S_OK;

}

That includes a font, a sprite or billboard to render the font on, and a TextHelper object that DXUT provides to ease dealing with text. CDXUTTextHelper is declared in SDKmisc.h and defined in SDKmisc.cpp.

We handle any resources that depend on the back buffer in OnD3D10ResizedSwapChain:

//------------------------------------------------------------------

// Create any D3D10 resources that depend on the back buffer

//------------------------------------------------------------------

HRESULT CALLBACK OnD3D10ResizedSwapChain(ID3D10Device* pd3dDevice,

IDXGISwapChain* pSwapChain,

const DXGI_SURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

HRESULT hr;

// Setup the camera's projection parameters

float fAspectRatio = pBackBufferSurfaceDesc->Width / ( FLOAT )pBackBufferSurfaceDesc->Height;

return S_OK;

}
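
The cast in the aspect ratio line matters, since the width and height in the surface description are unsigned integers. Here is a small standalone sketch of the difference; the function names and the fallback for a zero height are my own assumptions, not part of the sample.

```cpp
// Width and height arrive as unsigned integers, so one operand is cast
// to float before dividing to keep the fractional part.
float AspectRatio( unsigned int width, unsigned int height )
{
    if( height == 0 )
        return 1.0f;   // assumption: treat a zero-height surface as square
    return width / static_cast<float>( height );
}

// Without the cast the division is done in integers and the fraction is lost.
unsigned int AspectRatioTruncated( unsigned int width, unsigned int height )
{
    return height == 0 ? 1u : width / height;
}
```

For the 800 by 600 window used later in WinMain, the first function gives roughly 1.33 while the truncated one gives 1.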

For now there is no code in OnFrameMove; it’s just a shell.

//------------------------------------------------------------------

// Handle updates to the scene regardless of which D3D API is used

//------------------------------------------------------------------

void CALLBACK OnFrameMove( double fTime, float fElapsedTime,

void* pUserContext )

{

}

That will change soon enough. Note the lack of a device parameter to OnFrameMove: the framework uses a single OnFrameMove for both D3D10 and D3D9, which has some implications I will cover later.

In OnD3D10FrameRender we render the frame rate using the helper method RenderText10, which hides the use of the CDXUTTextHelper object:

//------------------------------------------------------------------

// Render the help and statistics text

//------------------------------------------------------------------

void RenderText10()

{

g_pTxtHelper->Begin();

g_pTxtHelper->SetInsertionPos( 2, 0 );

g_pTxtHelper->SetForegroundColor( D3DXCOLOR( 1.0f, 1.0f, 0.0f, 1.0f ) );

g_pTxtHelper->DrawTextLine( DXUTGetFrameStats( true ) );

g_pTxtHelper->DrawTextLine( DXUTGetDeviceStats( ) );

g_pTxtHelper->End();

}

Note the use of DXUTGetFrameStats and DXUTGetDeviceStats to retrieve and display the frame rate and device information. And OnD3D10FrameRender uses that as follows:

//------------------------------------------------------------------

// Render the scene using the D3D10 device

//------------------------------------------------------------------

void CALLBACK OnD3D10FrameRender( ID3D10Device* pd3dDevice,

double fTime, float fElapsedTime,

void* pUserContext )

{

// Clear render target and the depth stencil

float ClearColor[4] = { 0.176f, 0.196f, 0.667f, 0.0f };

pd3dDevice->ClearRenderTargetView( DXUTGetD3D10RenderTargetView(), ClearColor );

pd3dDevice->ClearDepthStencilView( DXUTGetD3D10DepthStencilView(), D3D10_CLEAR_DEPTH,1.0,0 );

//render stats

RenderText10();

}

Here you see the clearing of the render target and depth/stencil buffers and then the render of the FPS text and Device text using helper method RenderText10.

Now it’s time to clean up. In this simplistic example, OnD3D10ReleasingSwapChain does nothing:

//------------------------------------------------------------------

// Release D3D10 resources created in OnD3D10ResizedSwapChain

//------------------------------------------------------------------

void CALLBACK OnD3D10ReleasingSwapChain( void* pUserContext )

{

}

In OnD3D10DestroyDevice the font, sprite, and texthelper objects are released:

//------------------------------------------------------------------

// Release D3D10 resources created in OnD3D10CreateDevice

//------------------------------------------------------------------

void CALLBACK OnD3D10DestroyDevice( void* pUserContext )

{

//HUD

SAFE_RELEASE( g_pFont10 );

SAFE_RELEASE( g_pSprite10 );

SAFE_DELETE( g_pTxtHelper );

}

Handlers

Next we have a message handler, MsgProc. It currently does nothing:

//------------------------------------------------------------

// Handle messages to the application

//------------------------------------------------------------

LRESULT CALLBACK MsgProc( HWND hWnd, UINT uMsg,

WPARAM wParam, LPARAM lParam,

bool* pbNoFurtherProcessing,

void* pUserContext )

{

return 0;

}

There are two other handlers that may come up, OnKeyboard and OnMouse. They too are currently shells:

//--------------------------------------------------------------------------------------

// Handle key presses

//--------------------------------------------------------------------------------------

void CALLBACK OnKeyboard( UINT nChar, bool bKeyDown, bool bAltDown, void* pUserContext )

{

}

//--------------------------------------------------------------------------------------

// Handle mouse button presses

//--------------------------------------------------------------------------------------

void CALLBACK OnMouse( bool bLeftButtonDown, bool bRightButtonDown, bool bMiddleButtonDown,

bool bSideButton1Down, bool bSideButton2Down, int nMouseWheelDelta,

int xPos, int yPos, void* pUserContext )

{

}

WinMain

The next big component of the sample is the WinMain method:

//------------------------------------------------------------------

// Initialize everything and go into a render loop

//------------------------------------------------------------------

int WINAPI wWinMain( HINSTANCE hInstance,

HINSTANCE hPrevInstance,

LPWSTR lpCmdLine, int nCmdShow )

{

// Enable run-time memory check for debug builds.

#if defined(DEBUG) || defined(_DEBUG)

_CrtSetDbgFlag( _CRTDBG_ALLOC_MEM_DF | _CRTDBG_LEAK_CHECK_DF );

#endif

// DXUT will create and use the best device (either D3D9 or D3D10)

// that is available on the system depending on which D3D callbacks are set below

// Set general DXUT callbacks

DXUTSetCallbackFrameMove( OnFrameMove );

DXUTSetCallbackKeyboard( OnKeyboard );

DXUTSetCallbackMouse( OnMouse );

DXUTSetCallbackMsgProc( MsgProc );

DXUTSetCallbackDeviceChanging( ModifyDeviceSettings );

DXUTSetCallbackDeviceRemoved( OnDeviceRemoved );

// Set the D3D9 DXUT callbacks. Remove these sets if the app doesn't need to support D3D9

DXUTSetCallbackD3D9DeviceAcceptable( IsD3D9DeviceAcceptable );

DXUTSetCallbackD3D9DeviceCreated( OnD3D9CreateDevice );

DXUTSetCallbackD3D9DeviceReset( OnD3D9ResetDevice );

DXUTSetCallbackD3D9FrameRender( OnD3D9FrameRender );

DXUTSetCallbackD3D9DeviceLost( OnD3D9LostDevice );

DXUTSetCallbackD3D9DeviceDestroyed( OnD3D9DestroyDevice );

// Set the D3D10 DXUT callbacks. Remove these sets if the app doesn't need to support D3D10

DXUTSetCallbackD3D10DeviceAcceptable( IsD3D10DeviceAcceptable );

DXUTSetCallbackD3D10DeviceCreated( OnD3D10CreateDevice );

DXUTSetCallbackD3D10SwapChainResized( OnD3D10ResizedSwapChain );

DXUTSetCallbackD3D10FrameRender( OnD3D10FrameRender );

DXUTSetCallbackD3D10SwapChainReleasing( OnD3D10ReleasingSwapChain );

DXUTSetCallbackD3D10DeviceDestroyed( OnD3D10DestroyDevice );

// Perform any application-level initialization here

//start main processing

// Parse the command line, show msgboxes on error, no extra command line params

DXUTInit( true, true, NULL );

DXUTSetCursorSettings( true, true ); // Show the cursor and clip it when in full screen

DXUTCreateWindow( L"D3D10Maze-Base" );

DXUTCreateDevice( true, 800, 600 );

DXUTMainLoop(); // Enter into the DXUT render loop

// Perform any application-level cleanup here

return DXUTGetExitCode();

}
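
If the callback handshake in WinMain is new to you, this toy model may help. It is only a sketch of the pattern, not how DXUT is implemented: ToyFramework, LogMove, LogRender, and RunToyApp are names I invented, and the loop runs a fixed number of frames with a fixed time step instead of pumping Windows messages.

```cpp
#include <cstddef>
#include <string>

// Callback signatures modeled loosely on the frame move / frame render pair.
typedef void ( *FrameMoveFn )( double fTime, float fElapsedTime, void* pUserContext );
typedef void ( *FrameRenderFn )( double fTime, float fElapsedTime, void* pUserContext );

// Toy stand-in for the framework side of the handshake.
struct ToyFramework
{
    FrameMoveFn   pFrameMove;
    FrameRenderFn pFrameRender;
    void*         pUserContext;

    ToyFramework() : pFrameMove( NULL ), pFrameRender( NULL ), pUserContext( NULL ) {}

    void SetCallbackFrameMove( FrameMoveFn fn )     { pFrameMove = fn; }
    void SetCallbackFrameRender( FrameRenderFn fn ) { pFrameRender = fn; }

    // Each frame: update the scene first, then render it.
    // Callbacks that were never set are simply skipped.
    void MainLoop( int frames, float step )
    {
        double time = 0.0;
        for( int i = 0; i < frames; ++i )
        {
            if( pFrameMove )   pFrameMove( time, step, pUserContext );
            if( pFrameRender ) pFrameRender( time, step, pUserContext );
            time += step;
        }
    }
};

// Application side: each callback appends a letter to a log passed as user context.
void LogMove( double, float, void* pUserContext )
{
    static_cast<std::string*>( pUserContext )->push_back( 'M' );
}

void LogRender( double, float, void* pUserContext )
{
    static_cast<std::string*>( pUserContext )->push_back( 'R' );
}

// Registers both callbacks, runs the loop, and returns the call order.
std::string RunToyApp( int frames )
{
    std::string log;
    ToyFramework fw;
    fw.pUserContext = &log;
    fw.SetCallbackFrameMove( LogMove );
    fw.SetCallbackFrameRender( LogRender );
    fw.MainLoop( frames, 1.0f / 60.0f );
    return log;
}
```

The point of the pattern is that the application never writes the loop itself; it only hands over functions and lets the framework decide when to call them.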

Now that we have that basic structure defined, let’s go back and define the D3D9 code ( globals and callbacks ) and finish off our discussions of this “skeleton” app.

Globals-D3D9

The D3D9 code is very similar but shares the CDXUTTextHelper object, and the global declarations reflect that:

ID3DXFont* g_pFont9 = NULL;

ID3DXSprite* g_pSprite9 = NULL;

extern CDXUTTextHelper* g_pTxtHelper;

Callbacks-D3D9

In the OnD3D9CreateDevice method we handle long-lived resource creation:

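//------------------------------------------------------------------

// Create D3D9 resources that live through a device reset (D3DPOOL_MANAGED)

// and aren't tied to the back buffer size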

//------------------------------------------------------------------

HRESULT CALLBACK OnD3D9CreateDevice( IDirect3DDevice9* pd3dDevice,

const D3DSURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

HRESULT hr;

V_RETURN( D3DXCreateFont( pd3dDevice, 15, 0, FW_BOLD, 1, FALSE, DEFAULT_CHARSET,

OUT_DEFAULT_PRECIS, DEFAULT_QUALITY, DEFAULT_PITCH | FF_DONTCARE,

L"Arial", &g_pFont9 ) );

return S_OK;

}

For OnD3D9ResetDevice we create any resources that do not live through a device reset:

//------------------------------------------------------------------

// Create D3D9 resources that do not live through a device reset (D3DPOOL_DEFAULT)

// or that are tied to the back buffer size

//------------------------------------------------------------------

HRESULT CALLBACK OnD3D9ResetDevice( IDirect3DDevice9* pd3dDevice,

const D3DSURFACE_DESC* pBackBufferSurfaceDesc,

void* pUserContext )

{

HRESULT hr;

if( g_pFont9 ) V_RETURN( g_pFont9->OnResetDevice() );

V_RETURN( D3DXCreateSprite( pd3dDevice, &g_pSprite9 ) );

g_pTxtHelper = new CDXUTTextHelper( g_pFont9, g_pSprite9, NULL, NULL, 15 );

float fAspectRatio = pBackBufferSurfaceDesc->Width / ( FLOAT )pBackBufferSurfaceDesc->Height;

return S_OK;

}

Here we see a bit of difference in how D3D9 and D3D10 treat objects and object lifetimes. In D3D9 we “refresh” the font by calling its OnResetDevice method, and we create the sprite and the CDXUTTextHelper here instead of in OnD3D9CreateDevice. Remember that; it’s going to come up again.
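
One way to keep the D3D9 pairing straight is a toy model that tracks only which objects are alive after each callback. This is a sketch under my own assumptions: ToyD3D9App is a made-up type, and the call order (create, reset, lost, reset, lost, destroy) is meant to mimic startup, one switch to full screen, and shutdown.

```cpp
// Toy model of the four D3D9 callbacks, tracking only object lifetimes.
struct ToyD3D9App
{
    bool fontAlive;     // created in Create, survives device resets
    bool spriteAlive;   // created in Reset, released in Lost
    bool helperAlive;   // wraps the font and sprite, so it follows the sprite

    ToyD3D9App() : fontAlive( false ), spriteAlive( false ), helperAlive( false ) {}

    void OnCreateDevice()  { fontAlive = true; }
    void OnResetDevice()   { spriteAlive = true;  helperAlive = true; }
    void OnLostDevice()    { spriteAlive = false; helperAlive = false; }
    void OnDestroyDevice() { fontAlive = false; }

    int LiveObjects() const
    {
        return int( fontAlive ) + int( spriteAlive ) + int( helperAlive );
    }
};

// Startup, one reset in the middle, then shutdown.
int LiveObjectsAfterFullCycle()
{
    ToyD3D9App app;
    app.OnCreateDevice();
    app.OnResetDevice();    // first reset right after creation
    app.OnLostDevice();     // e.g. switching to full screen
    app.OnResetDevice();
    app.OnLostDevice();     // shutdown starts here
    app.OnDestroyDevice();
    return app.LiveObjects();
}
```

If everything created in a callback is released in its partner, the count is back to zero at the end, which is the property we are after when checking for leaks.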

The DXUT framework shares one OnFrameMove for both D3D9 and D3D10. That means any device-specific, time-based processing has to be performed in the OnD3D9FrameRender method instead. The D3D9 text helper method, RenderText, is the same as RenderText10 apart from its name:

//------------------------------------------------------------------

// Render the help and statistics text

//------------------------------------------------------------------

void RenderText()

{

g_pTxtHelper->Begin();

g_pTxtHelper->SetInsertionPos( 2, 0 );

g_pTxtHelper->SetForegroundColor( D3DXCOLOR( 1.0f, 1.0f, 0.0f, 1.0f ) );

g_pTxtHelper->DrawTextLine( DXUTGetFrameStats( true ) );

g_pTxtHelper->DrawTextLine( DXUTGetDeviceStats( ) );

g_pTxtHelper->End();

}

OnD3D9FrameRender is similar to the D3D10 version with just the changes required by D3D9:

//------------------------------------------------------------------

// Render the scene using the D3D9 device

//------------------------------------------------------------------

void CALLBACK OnD3D9FrameRender( IDirect3DDevice9* pd3dDevice,

double fTime, float fElapsedTime,

void* pUserContext )

{

HRESULT hr;

// Clear the render target and the zbuffer

V( pd3dDevice->Clear( 0, NULL, D3DCLEAR_TARGET | D3DCLEAR_ZBUFFER,

D3DCOLOR_ARGB( 0, 45, 50, 170 ),

1.0f, 0 ) );

// Render the scene

if( SUCCEEDED( pd3dDevice->BeginScene() ) )

{

//HUD

RenderText();

V( pd3dDevice->EndScene() );

}

}

In OnD3D9LostDevice we call the font OnLostDevice method, release the sprite, and delete the texthelper:

//------------------------------------------------------------------

// Release D3D9 resources created in the OnD3D9ResetDevice callback

//------------------------------------------------------------------

void CALLBACK OnD3D9LostDevice( void* pUserContext )

{

//HUD

if( g_pFont9 ) g_pFont9->OnLostDevice();

SAFE_RELEASE( g_pSprite9 );

SAFE_DELETE( g_pTxtHelper );

}

//------------------------------------------------------------------

// Release D3D9 resources created in the OnD3D9CreateDevice callback

//------------------------------------------------------------------

void CALLBACK OnD3D9DestroyDevice( void* pUserContext )

{

//HUD

SAFE_RELEASE( g_pFont9 );

}

And we are done.

Here is a screenshot of the app running under D3D10:

Here is a zip folder containing the project.

It has been built using VS 2008.

It has been run on both a desktop PC with a 9800GX2 and a laptop PC with an 8400M. It should work just fine on an ATI part, though; that is just the hardware they gave me and is not any implied endorsement.

Conclusion

There you have it, an app about nothing, to misquote Seinfeld.

This is an important step in this series nonetheless. This app handles entering and leaving full screen, resizing, and closing correctly. The FPS counter displays the frame rate. It responds to the -forcexxx command line parameters. Plus the app auto-detects D3D10 or D3D9 support and picks the correct runtime.

Even though it really appears to do nothing, it actually does quite a bit.

The next article will add the simple skybox. This one came out relatively quickly; I don’t want to promise a repeat of that so we will see how fast I can turn it around. Stay tuned!

PS:

I apologize for the indentation of the source; it appears Blogger doesn't like leading blanks and is cutting my tabs or spaces.

Friday, December 5, 2008

Maze Sample : Article 1 - DXUT Framework

Use someone else’s framework and you can get bit by pieces of it you do not understand, pay in terms of overhead for things you do not need, and overcomplexify your life. And make for a harder explanation and harder reading.

Use your own and you end up having to explain and justify everything and risk it not making sense.

Since I am going to have to explain everything anyways, ;-), using the DXUT framework that comes with the DX SDK seems like a reasonable tradeoff since:

- it’s a de-facto standard,

- is well-supported, and

- does mostly what I want.

DXUT Overview

DXUT is semi-documented in the DX SDK. I say that for two reasons:

- The documentation that does exist is in at least two places

- Large swaths of DXUT are undocumented.

Open up the DX SDK Documentation, go to the TOC, and expand both:

- the Programming Guide->DXUT section and

- the DirectX Graphics->Tutorials and Samples section

Reading those two sections provides you with the basics of DXUT.

The order I see as making the most sense is a little funky, though.

I would suggest starting with “Introduction to DXUT” in the Tutorials section and then moving back to the Programming Guide to pick up the basics like "Create a Window", "Create a Device", "Create a Main Loop", and "Handle Events". Then I would move back to the Tutorials section to pick up Meshes in DXUT and Advanced DXUT. After that I would go back to the Programming Guide and finish with "Handle Errors", "Advanced Device Selection", and "Additional Functions". You see, it’s a bunch of back and forth.

With that reading under your belt, following the flow of the SDK sample code becomes pretty simple and the hard part becomes understanding the implementation details of a particular sample. Which is the putative purpose of a framework, to hoist useful infrastructure but not get in the way too much.

There are still some parts of DXUT that are useful and unexplained though.

For instance, the Additional Functions section mentions a few of the bits and bobs that are available ( not all though ) and then doesn’t go into a lot of detail. So you are forced to fend for yourself.

And the "Advanced DXUT" section mentions one of the camera types ( ModelViewer ) but not the other ( FirstPerson ) even though it does go into good detail on the various 3D UI elements that DXUT provides.

I won’t claim I am going to go into exhaustive detail on all the nooks and crannies but I will explain a couple of bits that aren’t mentioned explicitly in the docs. For instance, I will make use of both camera types at first and then perform "code fusion" and jam the two together to make my own camera type.

Article Series Usage of DXUT

For this article series, most often, we can get away with writing code in six of the callbacks, using just enough of the HUD and UI to render the frame rate, using a MsgProc to process user input for the cameras, using a WinMain, and using the helpers for meshes and cameras. So that is what I will start with.

Callbacks

From the “Introduction to DXUT” Tutorial you learn about callbacks. The primary ones I use, shown here in their D3D10 flavor and set using the DXUTSetCallbackxxx functions, are:

Device Creation and Destruction

DXUTSetCallbackD3D10DeviceCreated( OnD3D10CreateDevice );

DXUTSetCallbackD3D10DeviceDestroyed( OnD3D10DestroyDevice );

Swap Chain resizing

DXUTSetCallbackD3D10SwapChainResized( OnD3D10ResizedSwapChain );

DXUTSetCallbackD3D10SwapChainReleasing( OnD3D10ReleasingSwapChain );

Animating and Rendering

DXUTSetCallbackD3D10FrameRender( OnD3D10FrameRender );

DXUTSetCallbackFrameMove( OnFrameMove );

MsgProc

In addition I use the MsgProc:

DXUTSetCallbackMsgProc( MsgProc );

This allows the cameras to handle user input.

WinMain

And WinMain is required to set everything up:

INT WINAPI WinMain( HINSTANCE, HINSTANCE, LPSTR, INT )

{

// Perform any application-level initialization here

}

as well as to allow any custom initialization and resetting the window size and title.

In the next article, when I create the “Base” application I will go through these methods in more detail to set the baseline we will build upon towards getting a maze rendering in a skybox with a couple of cameras, a skinned character, and few more bits and bobs.

Advanced Usage

A goal we will maintain throughout the series is to run clean on the debug runtime. The debug runtime will alert you to API usage errors as well as memory leaks. Fixing those is required as part of being a “clean” app. Running the app on the debug runtime, hitting alt-enter to go full screen, hitting alt-enter again to return to windowed mode, resizing the window, and exiting will be our basic runtime unit test to allow us to verify we handle the basic behaviors without leaking objects.

Another interesting and hidden property of the DXUT framework is its reaction when switching between full screen and windowed modes if you leak memory objects. In this case, you end up with an app with no window frame and a client area only. This serves as a severe indication you need to fix this bad behavior now. While a tad draconian, this is effective and I must say I like it since I religiously believe you must run clean or else you are no professional.

Buried in the SDK is the “EmptyProject10”. The coolest thing about Empty is that it shows how to use the DXUT framework to enable a single app that runs under both D3D9 and D3D10, detecting what is available at runtime and doing the right thing. I will use this capability and write code for both D3D9 and D3D10, but occasionally this will mean slightly different functionality as I will strive to use each API to its fullest and not go for “vanilla” that runs the same everywhere.
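
To make "detecting what is available at runtime and doing the right thing" a bit more concrete, here is a toy decision function. It is my own simplification, not DXUT's actual selection logic: ToyCaps, ToyApi, and PickApi are invented names, and the rule sketched is simply "prefer D3D10 when its callbacks are set and it is available, otherwise fall back to D3D9", with an optional forced API of 9 or 10.

```cpp
enum ToyApi { TOY_API_NONE = 0, TOY_API_D3D9 = 9, TOY_API_D3D10 = 10 };

// What the toy framework knows when it has to choose (all hypothetical).
struct ToyCaps
{
    bool d3d9CallbacksSet;
    bool d3d9Available;
    bool d3d10CallbacksSet;
    bool d3d10Available;    // e.g. suitable OS and hardware
};

// forcedApi: 0 means no preference, 9 or 10 restricts the choice to that API.
ToyApi PickApi( const ToyCaps& caps, int forcedApi )
{
    const bool can10 = caps.d3d10CallbacksSet && caps.d3d10Available && forcedApi != 9;
    const bool can9  = caps.d3d9CallbacksSet  && caps.d3d9Available  && forcedApi != 10;

    if( can10 ) return TOY_API_D3D10;   // prefer the newer API when possible
    if( can9 )  return TOY_API_D3D9;    // otherwise fall back
    return TOY_API_NONE;                // nothing usable
}
```

In this model, removing one set of callbacks from WinMain is enough to take that API out of the running.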

Finally, DXUT has a bunch of command line parameters, two of which I use a lot:

ForceVSync turns vsync on/off. You use this by entering:

-forcevsync:n

where n=0 disables and n=1 enables vsync

into the debugging->command line arguments Project Properties.

ForceAPI lets you force the usage of D3D9 or D3D10, useful to test D3D9 on D3D10 hardware. You use this by entering:

-forceapi:n

where n=9 enables D3D9 and n=10 enables D3D10

into the debugging->command line arguments Project Properties.
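
DXUT parses these switches for you, so you never need to write this yourself. Still, as a sketch of what reading a "-name:n" style switch involves, here is a small standalone helper. SwitchValue is a hypothetical function of mine, not a DXUT API, and unlike a real parser it is case-sensitive and does no validation.

```cpp
#include <cstdlib>
#include <string>

// Returns the integer following "-name:" in cmdLine, or fallback if the
// switch does not appear at all.
int SwitchValue( const std::string& cmdLine, const std::string& name, int fallback )
{
    const std::string key = "-" + name + ":";
    const std::string::size_type pos = cmdLine.find( key );
    if( pos == std::string::npos )
        return fallback;

    // atoi stops at the first character that is not part of the number.
    return std::atoi( cmdLine.c_str() + pos + key.size() );
}
```

With a command line of "-forcevsync:0 -forceapi:9", asking for "forceapi" yields 9 and asking for "forcevsync" yields 0.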

With that, you now know the components I will base this series on. I believe we are ready to move on to setting the baseline code up.

But that is the next article, patience my young Padawan.

Next Steps

The articles will follow the naming convention “Maze Sample: Article n – subject” so you will know from the blog post title what article you are about to get. That will also allow me to interleave other posts if something comes up while I am on this roll. So this article title is “Maze Sample: Article 1 – DXUT Framework” in conformance with the naming convention.

Expect roughly one article a week, and please do allow me time off for the holidays. Oh, and I am taking vacation Jan 5-12 early next year, heading to Mexico to escape the dreary Seattle weather. So that will have an impact on the article schedule too.

I hope you find this set of articles useful.
